Illumination-based Data Augmentation for Robust Background Subtraction

Abstract

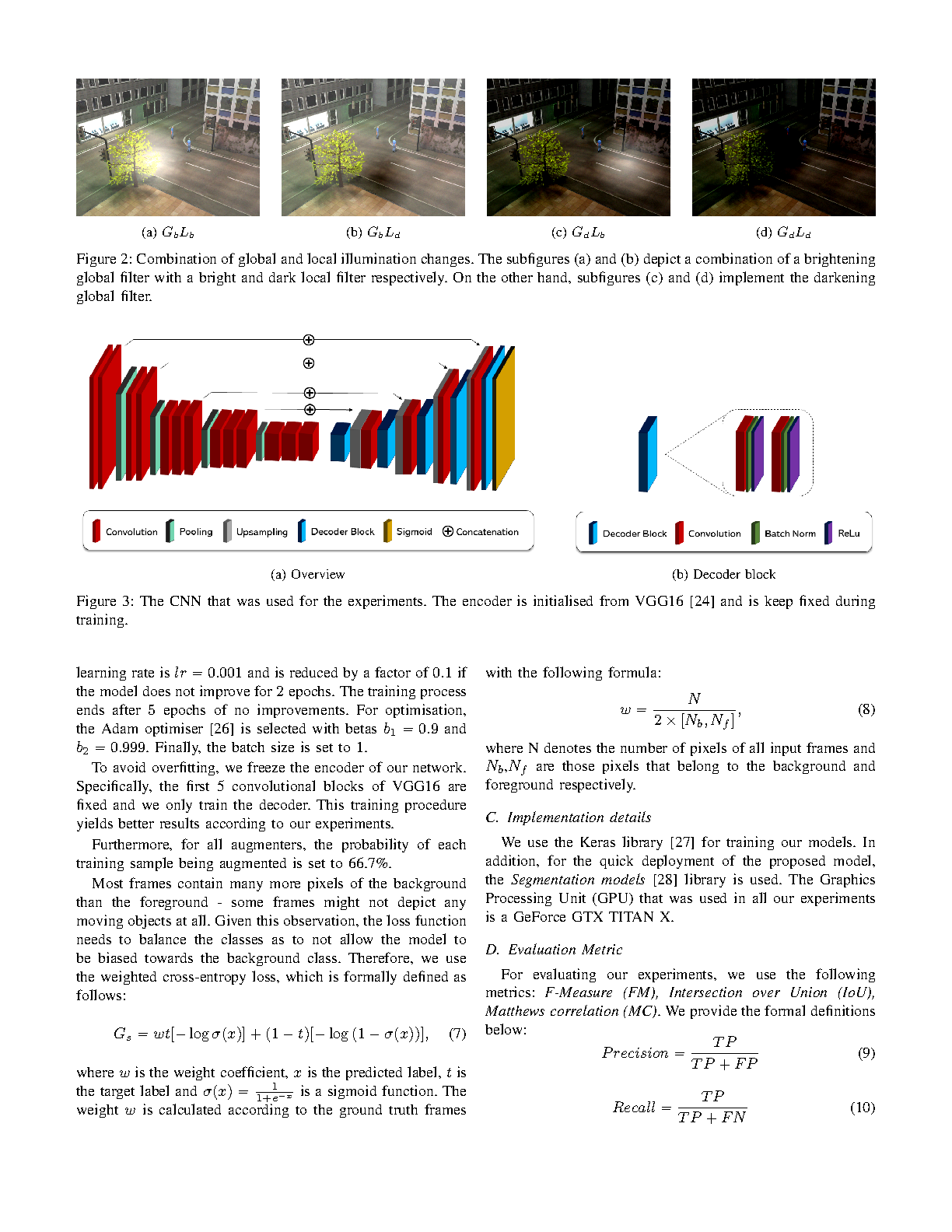

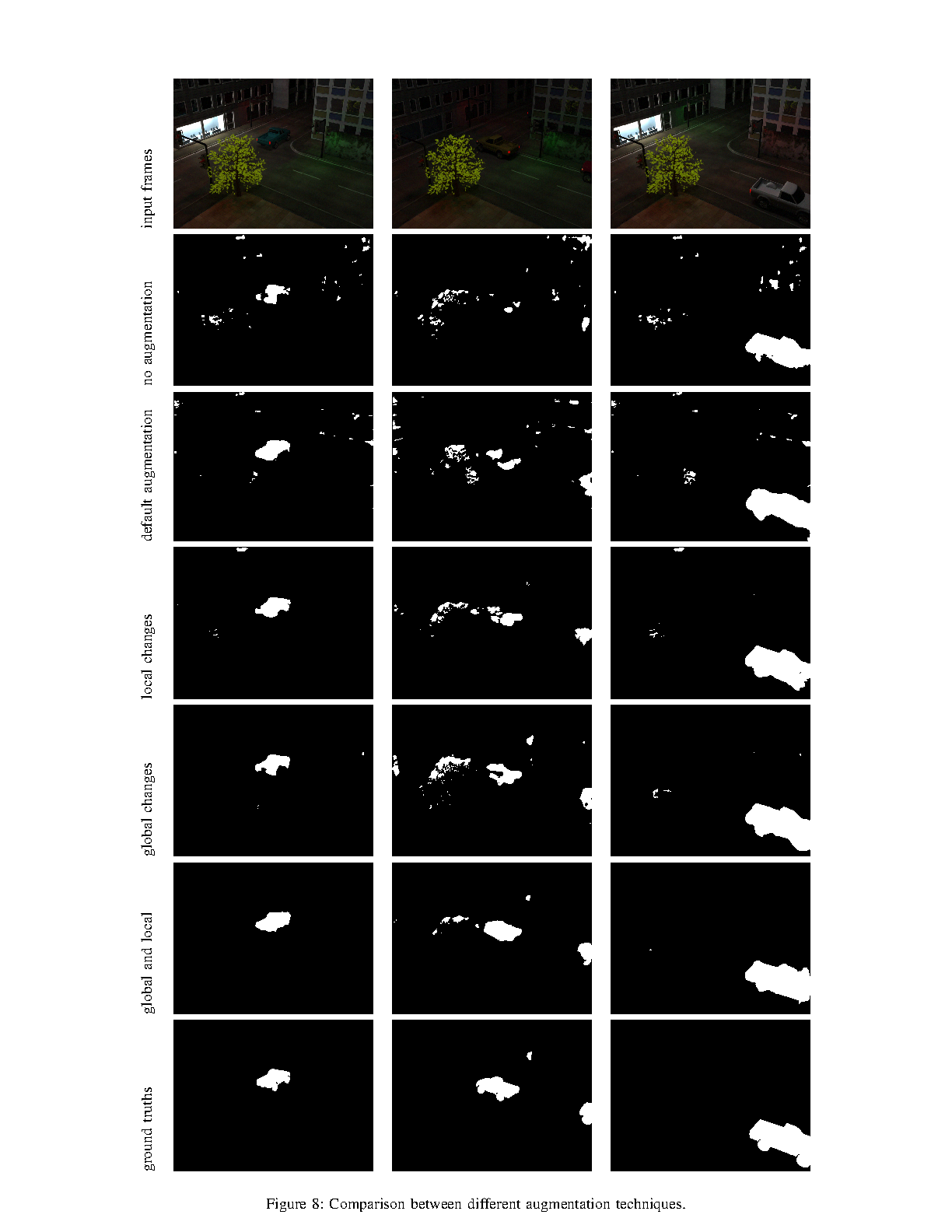

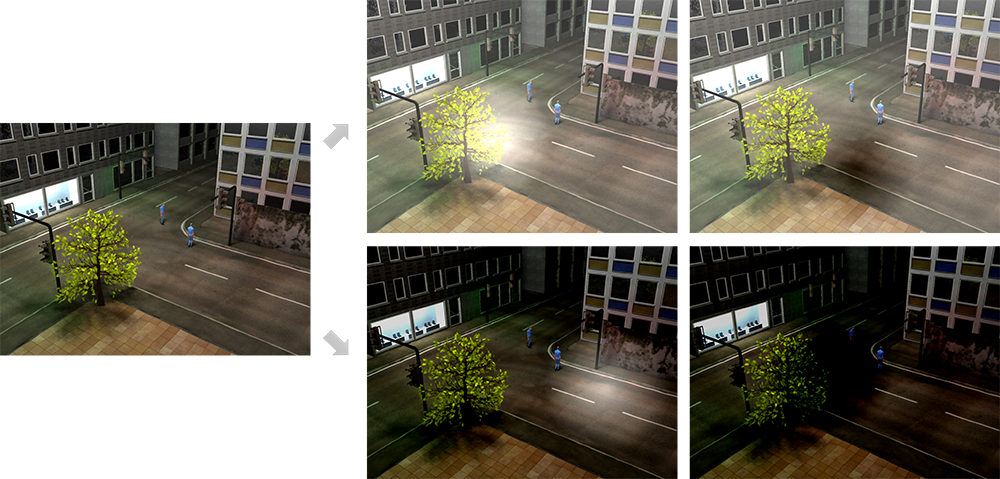

A core challenge in background subtraction (BGS) is handling videos in which illumination changes suddenly between consecutive frames. In this paper, we tackle the problem from a data perspective, using data augmentation. Our method generates an unlimited supply of training data on the fly and applies semantic transformations of illumination that enhance the generalisation of the model. It simulates flashes and shadows by applying the Euclidean distance transform to a randomly generated binary mask. Such data allows us to effectively train an illumination-invariant deep learning model for BGS. Experimental results show that the synthetic data improves the models' ability to perform BGS even when significant illumination changes take place.
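The idea of turning a random binary mask into a smooth lighting change through a Euclidean distance transform can be sketched roughly as follows. This is a minimal illustration and not the paper's implementation: the function names (`distance_to_mask`, `augment_illumination`), the number of seed pixels, the linear falloff, and the multiplicative gain are all assumptions made for the example, and the distance transform is computed by brute force with NumPy to keep the sketch dependency-light.

```python
import numpy as np


def distance_to_mask(mask):
    """Brute-force Euclidean distance transform.

    Returns, for every pixel, the distance to the nearest True pixel of `mask`.
    Only suitable for masks with a handful of True pixels.
    """
    ys, xs = np.nonzero(mask)
    h, w = mask.shape
    yy, xx = np.mgrid[0:h, 0:w]
    # Squared distance from every pixel to every seed, shape (K, H, W).
    d2 = (yy[None] - ys[:, None, None]) ** 2 + (xx[None] - xs[:, None, None]) ** 2
    return np.sqrt(d2.min(axis=0))


def augment_illumination(frame, rng, n_seeds=3, max_strength=0.6):
    """Apply a hypothetical flash (brighten) or shadow (darken) to an HxWx3 uint8 frame."""
    h, w = frame.shape[:2]

    # Random binary mask: a few seed pixels mark the centres of the light change.
    mask = np.zeros((h, w), dtype=bool)
    mask[rng.integers(0, h, n_seeds), rng.integers(0, w, n_seeds)] = True

    # Distance map normalised to a falloff: 1 at the seeds, 0 at the farthest pixel.
    dist = distance_to_mask(mask)
    falloff = 1.0 - dist / max(dist.max(), 1e-8)

    # Assumed convention: positive strength acts as a flash, negative as a shadow.
    strength = rng.uniform(-max_strength, max_strength)
    gain = 1.0 + strength * falloff

    out = frame.astype(np.float32) * gain[..., None]
    return np.clip(out, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
frame = np.full((32, 48, 3), 128, dtype=np.uint8)  # stand-in for a video frame
augmented = augment_illumination(frame, rng)
```

Because the mask and the strength are drawn afresh on every call, a training loop could call `augment_illumination` on each sampled frame and obtain a different lighting condition every time, which matches the "on the fly" generation described above.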

Publication

Illumination-based Data Augmentation for Robust Background Subtraction by Yao Tan, Hubert P. H. Shum, Fei Chao, V. Vijayakumar and Longzhi Yang in 2019

Proceedings of the 2019 International Conference on Software Knowledge Information Management and Applications (SKIMA)

Links and Downloads