Triplet Loss with Channel Attention for Person Re-identification

Abstract

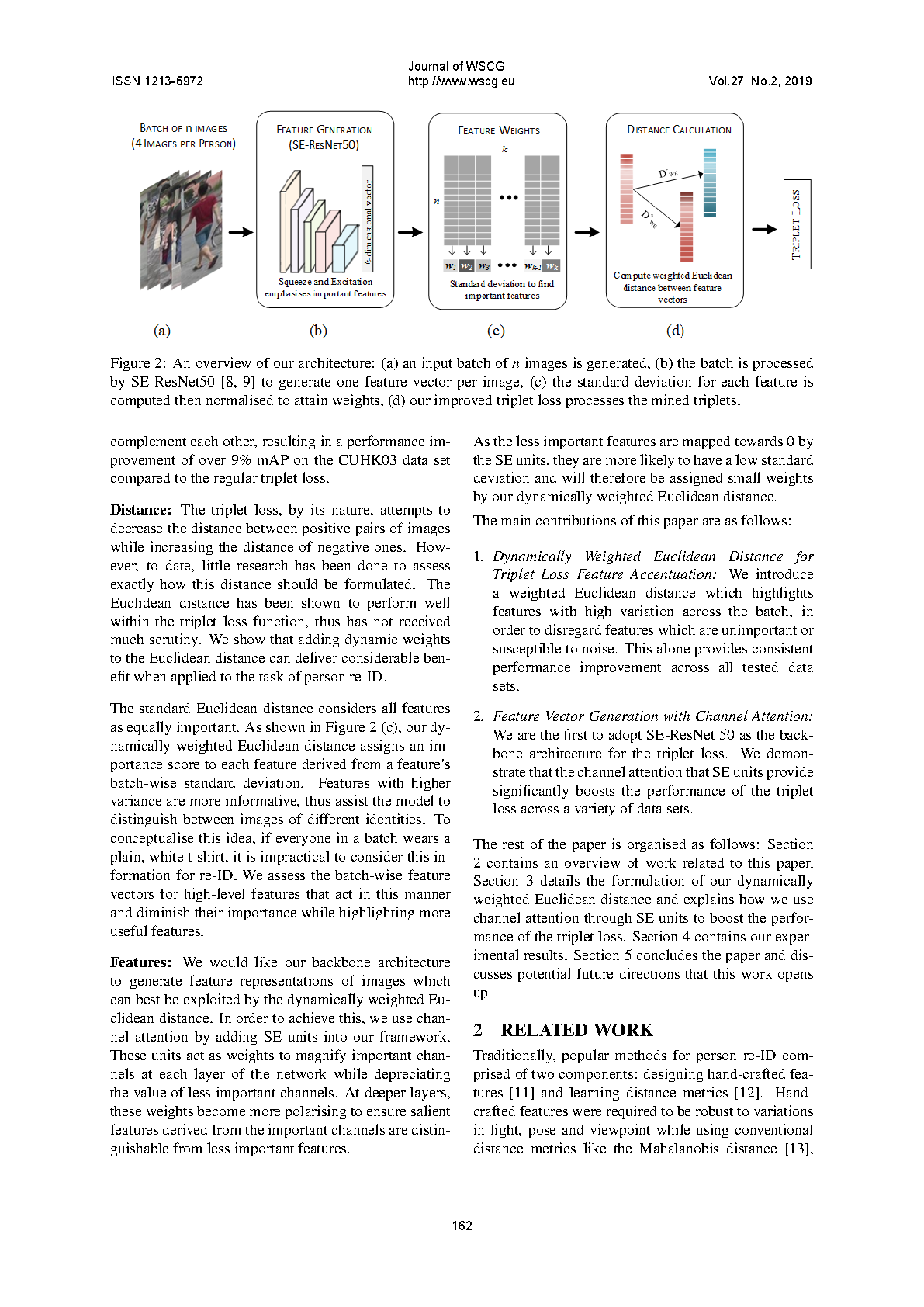

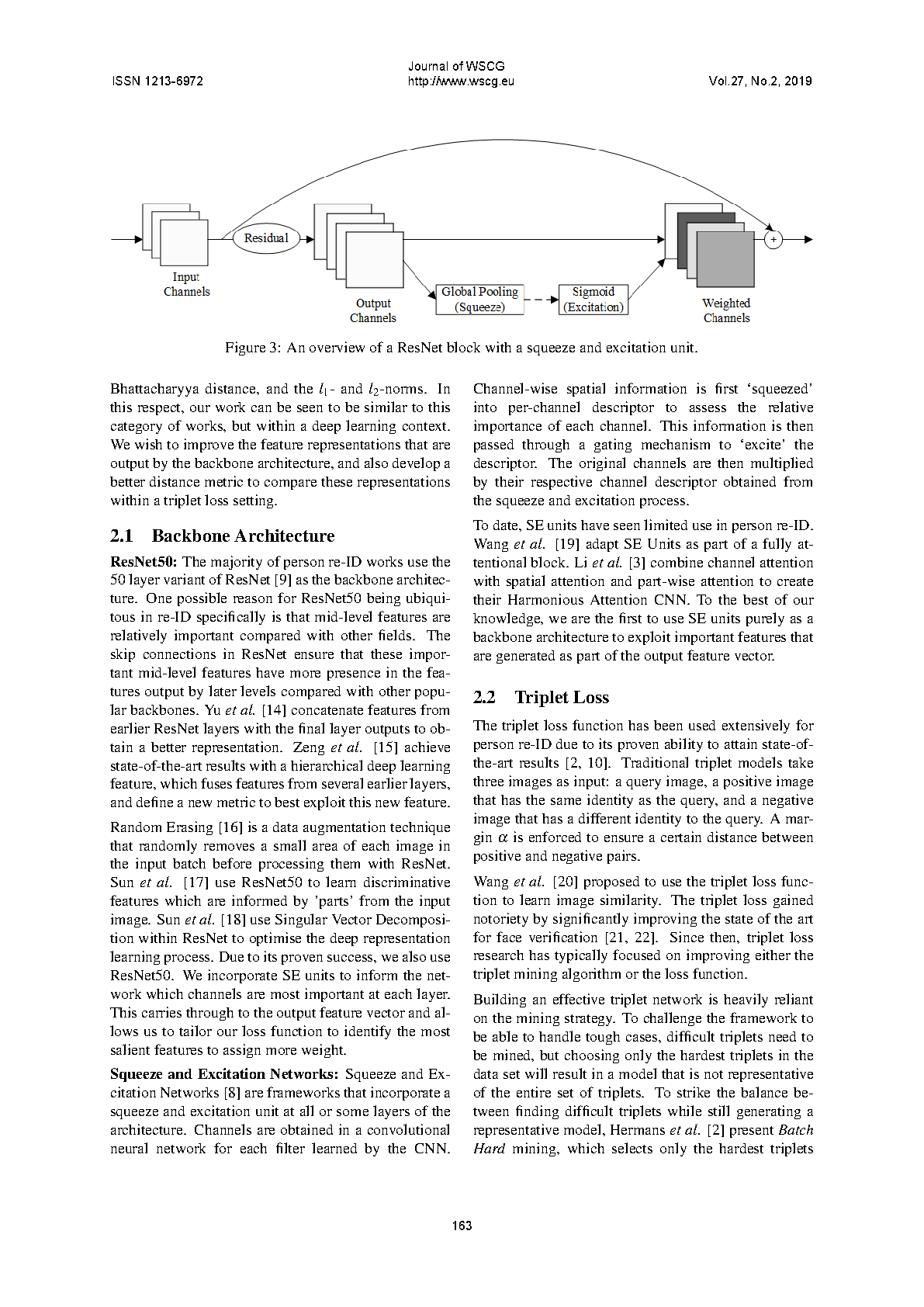

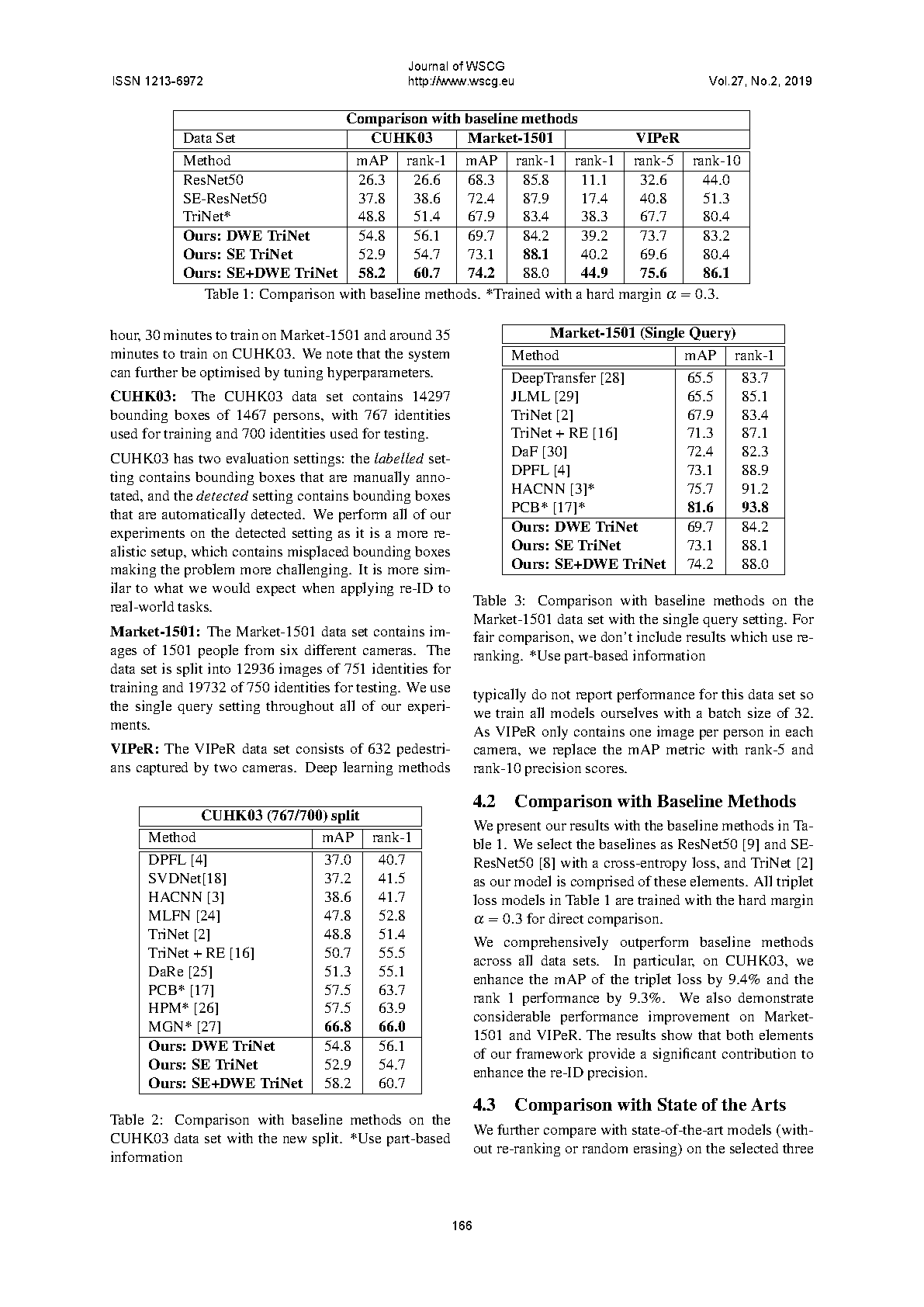

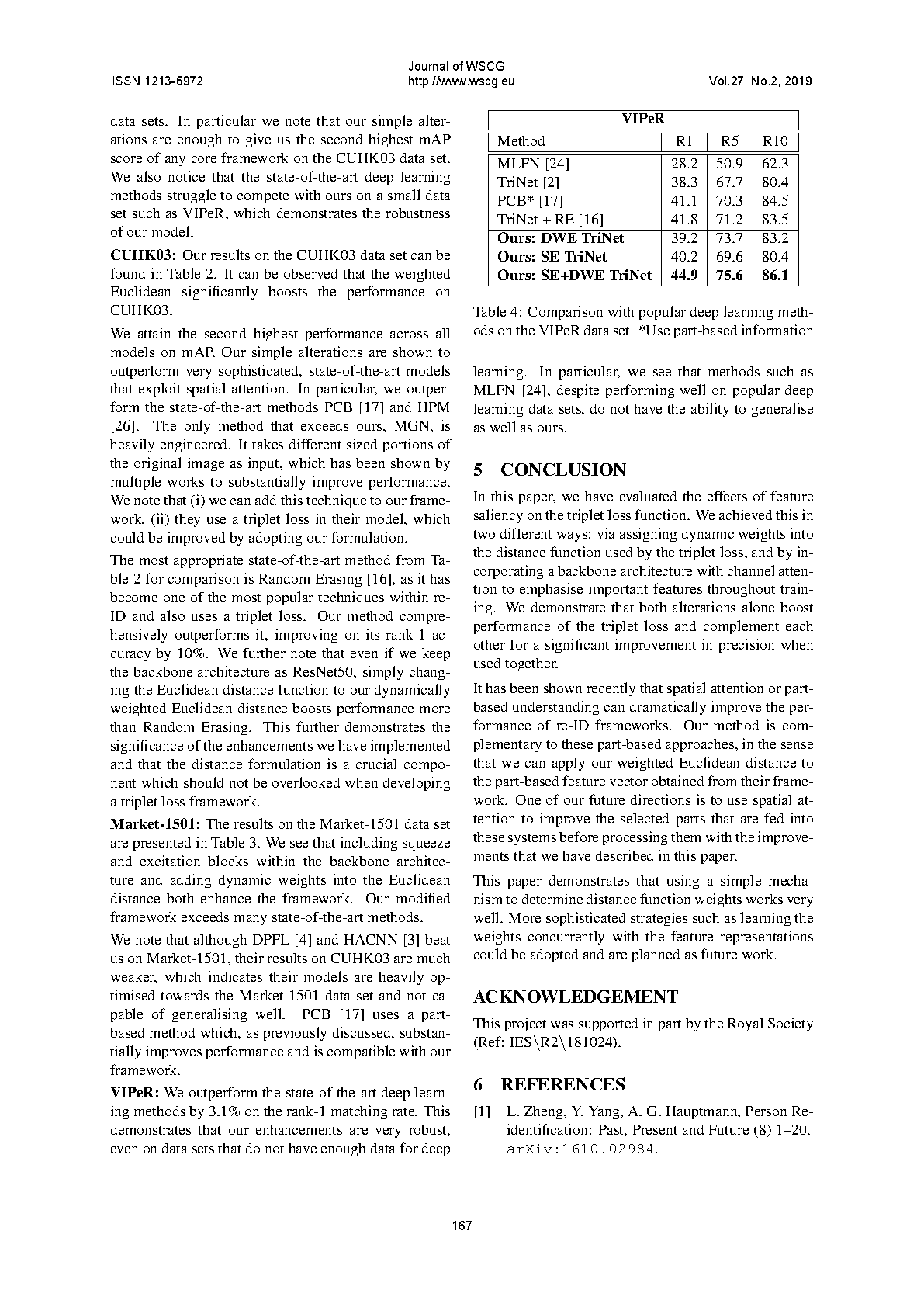

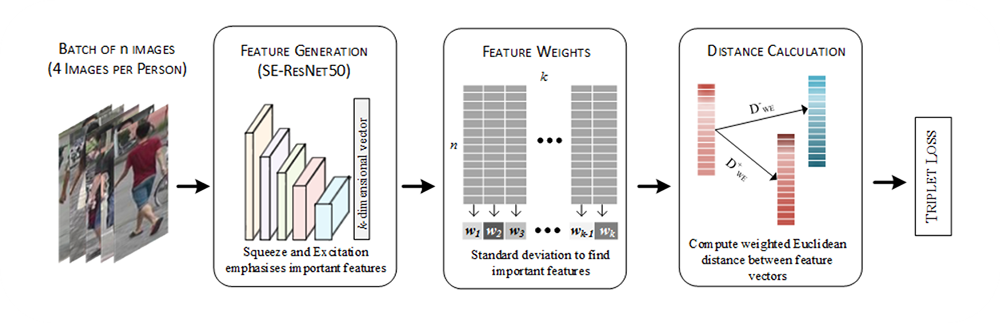

The triplet loss function has seen extensive use within person re-identification. Most works focus on either improving the mining algorithm or adding new terms to the loss function itself. Our work instead concentrates on two other core components of the triplet loss that have been under-researched. First, we improve the standard Euclidean distance with dynamic weights, which are selected based on the standard deviation of features across the batch. Second, we exploit channel attention via a squeeze-and-excitation unit in the backbone model to emphasise important features throughout all layers of the model. This ensures that the output feature vector is a better representation of the image, and is also more suitable for use within our dynamically weighted Euclidean distance function. We demonstrate that our alterations provide significant performance improvements across popular re-identification data sets, including an almost 10% mAP improvement on the CUHK03 data set. The proposed model attains results competitive with many state-of-the-art person re-identification models.
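The two components described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The abstract does not state how the standard deviation is mapped to weights, so `std_weights` assumes weights proportional to the per-dimension batch standard deviation, normalised to mean 1. The function names, the margin of 0.3, and the bias-free two-layer gate in `squeeze_excite` are likewise illustrative assumptions.

```python
import numpy as np

def std_weights(features, eps=1e-12):
    """Per-dimension weights from the batch standard deviation.

    features: (batch, dim) array of embeddings.
    Assumption: weight proportional to std, normalised to mean 1.
    The abstract does not specify the exact mapping.
    """
    std = features.std(axis=0)
    return std / (std.mean() + eps)

def weighted_euclidean(a, b, w):
    """Euclidean distance with per-dimension weights w."""
    return np.sqrt(np.sum(w * (a - b) ** 2, axis=-1))

def triplet_loss(anchor, positive, negative, w, margin=0.3):
    """Standard hinge-style triplet loss using the weighted distance.

    The margin value is a placeholder, not taken from the paper.
    """
    d_ap = weighted_euclidean(anchor, positive, w)
    d_an = weighted_euclidean(anchor, negative, w)
    return np.maximum(d_ap - d_an + margin, 0.0).mean()

def squeeze_excite(x, w1, w2):
    """Squeeze-and-excitation gate over channels (biases omitted).

    x:  (N, C, H, W) feature map
    w1: (C, C // r) reduction weights, w2: (C // r, C) expansion weights
    """
    s = x.mean(axis=(2, 3))                 # squeeze: global average pool -> (N, C)
    z = np.maximum(s @ w1, 0.0)             # excitation, step 1: ReLU
    g = 1.0 / (1.0 + np.exp(-(z @ w2)))     # excitation, step 2: sigmoid gate in (0, 1)
    return x * g[:, :, None, None]          # rescale each channel by its gate
```

Under this assumed weighting, a feature dimension that is constant across the batch receives weight 0 and does not contribute to the distance, while dimensions that vary more across the batch contribute more.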

Publication

Triplet Loss with Channel Attention for Person Re-identification by Subhas Barman, Hubert P. H. Shum, Samiran Chattopadhyay and Debasis Samanta in 2021

Journal of WSCG - Proceedings of the 2019 International Conferences in Central Europe on Computer Graphics, Visualization and Computer Vision (WSCG)

Links and Downloads