Action Recognition from Arbitrary Views Using Transferable Dictionary Learning

Abstract

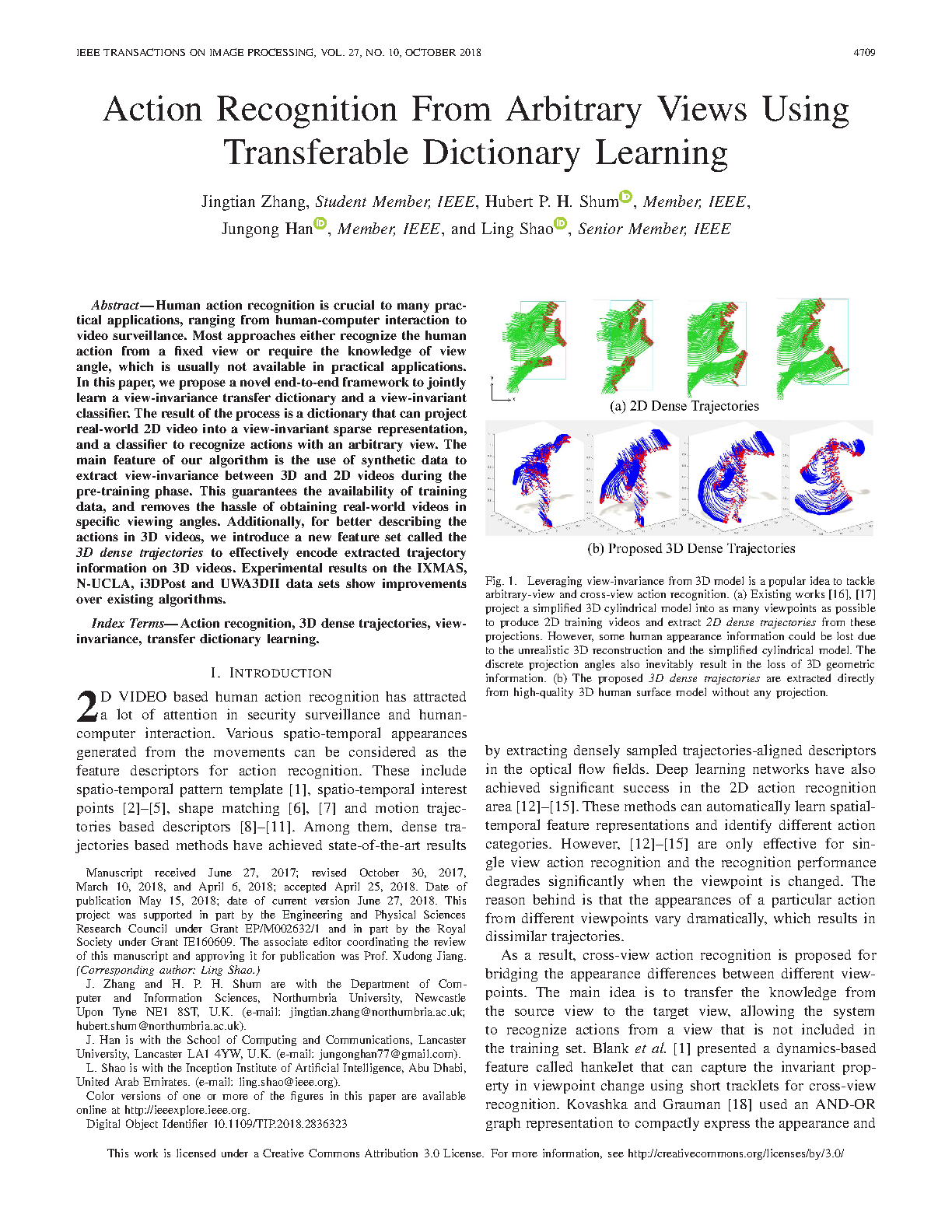

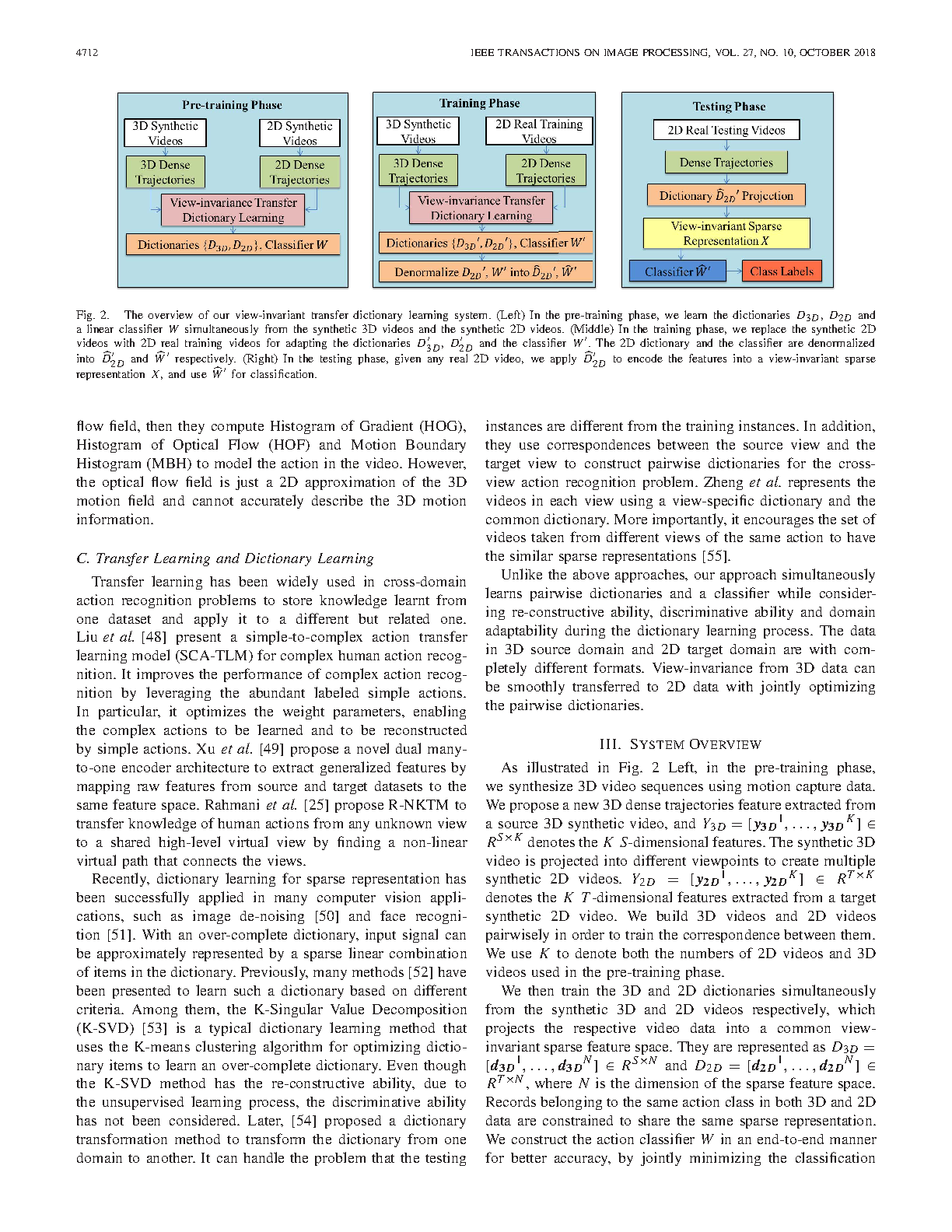

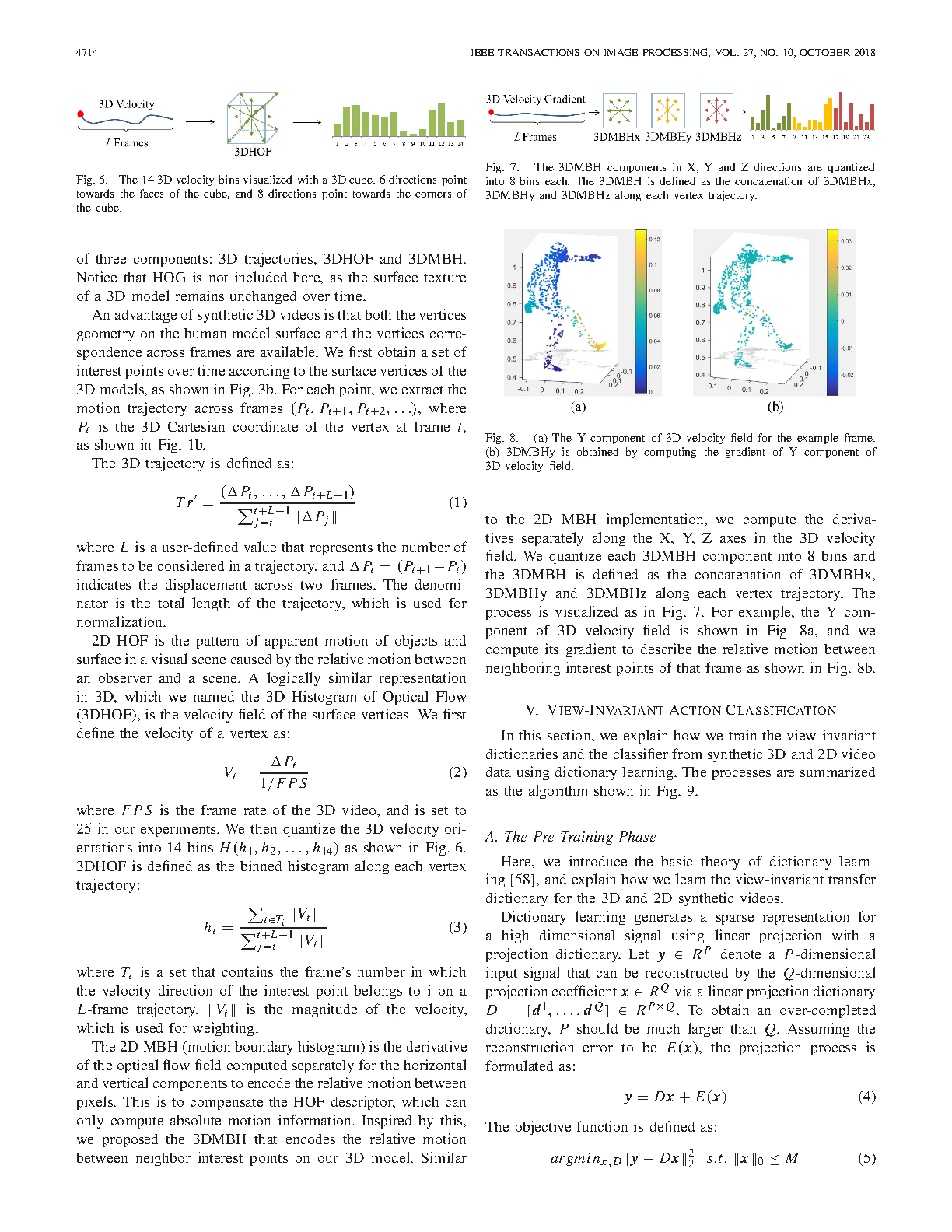

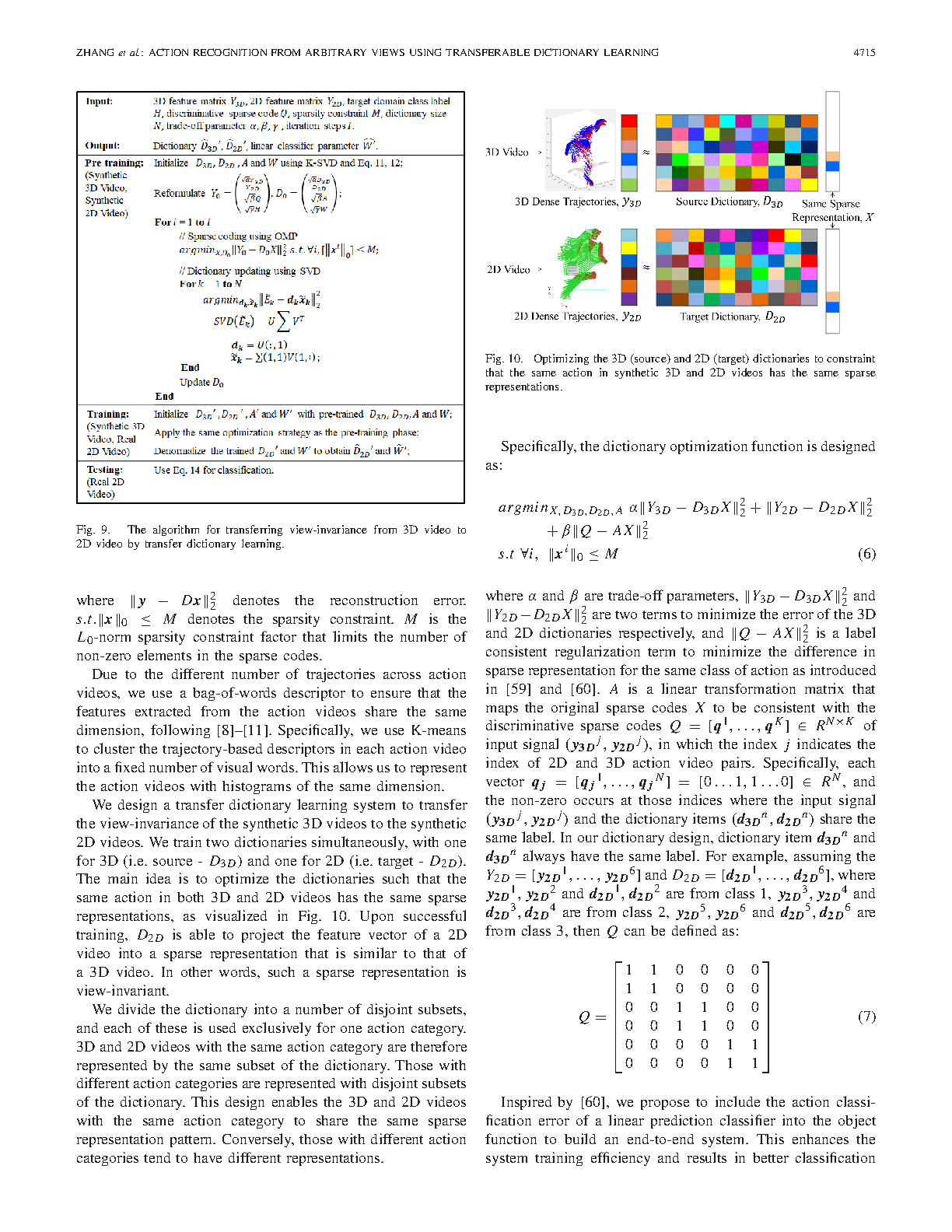

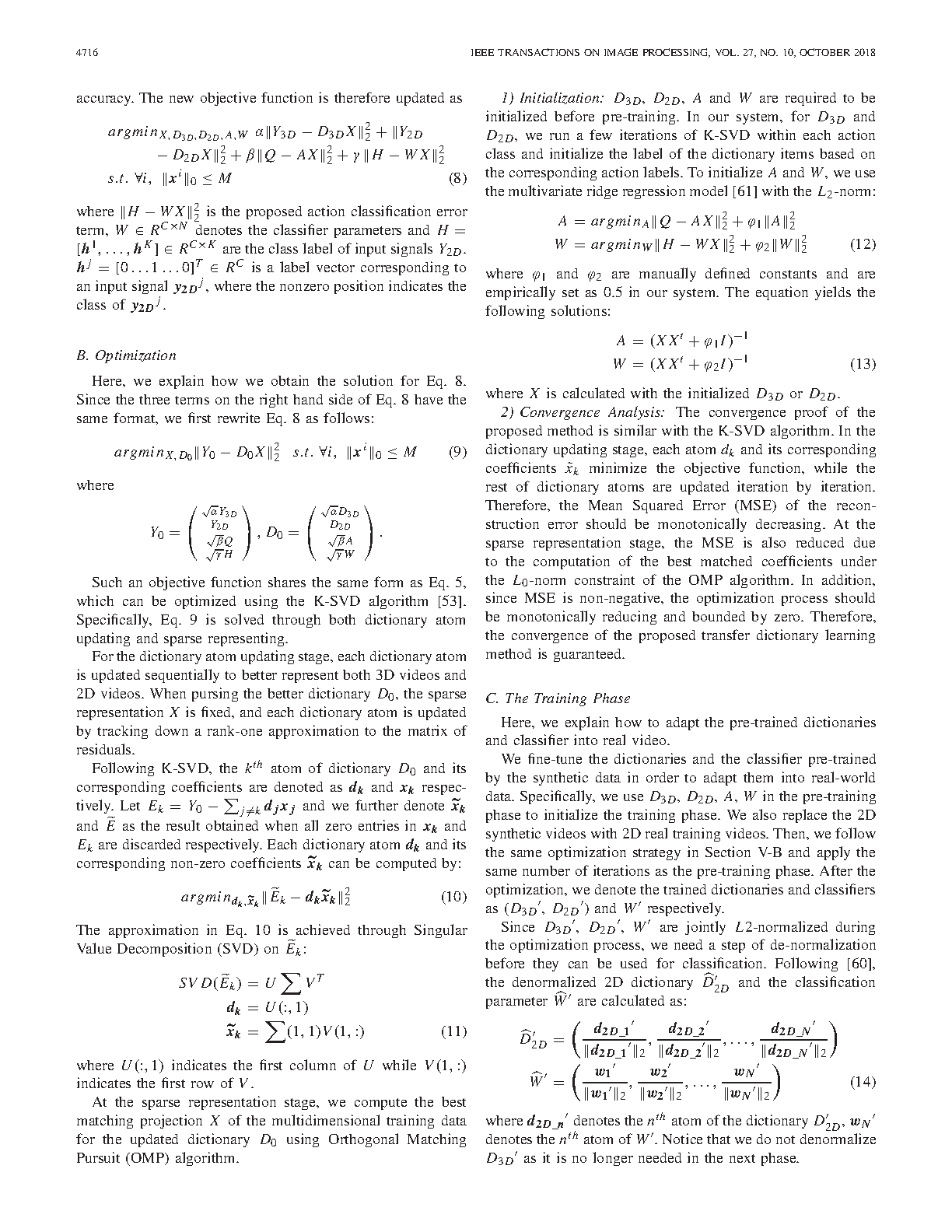

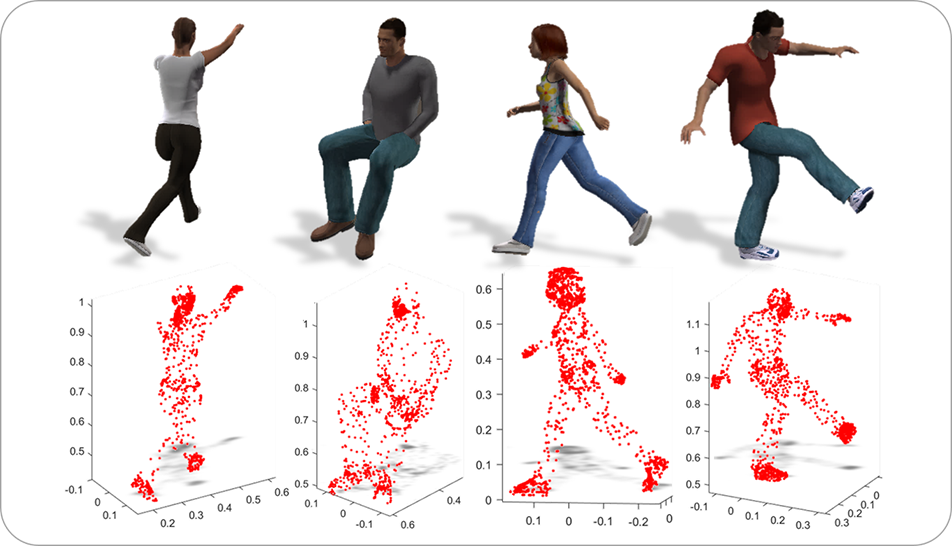

Human action recognition is crucial to many practical applications, ranging from human-computer interaction to video surveillance. Most approaches either recognize human actions from a fixed view or require knowledge of the view angle, which is usually not available in practice. In this paper, we propose a novel end-to-end framework that jointly learns a view-invariance transfer dictionary and a view-invariant classifier. The result is a dictionary that projects real-world 2D video into a view-invariant sparse representation, and a classifier that recognizes actions from an arbitrary view. The main feature of our algorithm is the use of synthetic data to extract view-invariance between 3D and 2D videos during the pre-training phase. This guarantees the availability of training data and removes the hassle of obtaining real-world videos at specific viewing angles. Additionally, to better describe the actions in 3D videos, we introduce a new feature set, called 3D dense trajectories, that encodes trajectory information extracted from 3D videos. Experimental results on the IXMAS, N-UCLA, i3DPost and UWA3DII data sets show improvements over existing algorithms.

Publication

Action Recognition from Arbitrary Views Using Transferable Dictionary Learning by Naoki Nozawa, Hubert P. H. Shum, Edmond S. L. Ho and Shigeo Morishima in 2018

IEEE Transactions on Image Processing (TIP)

Links and Downloads