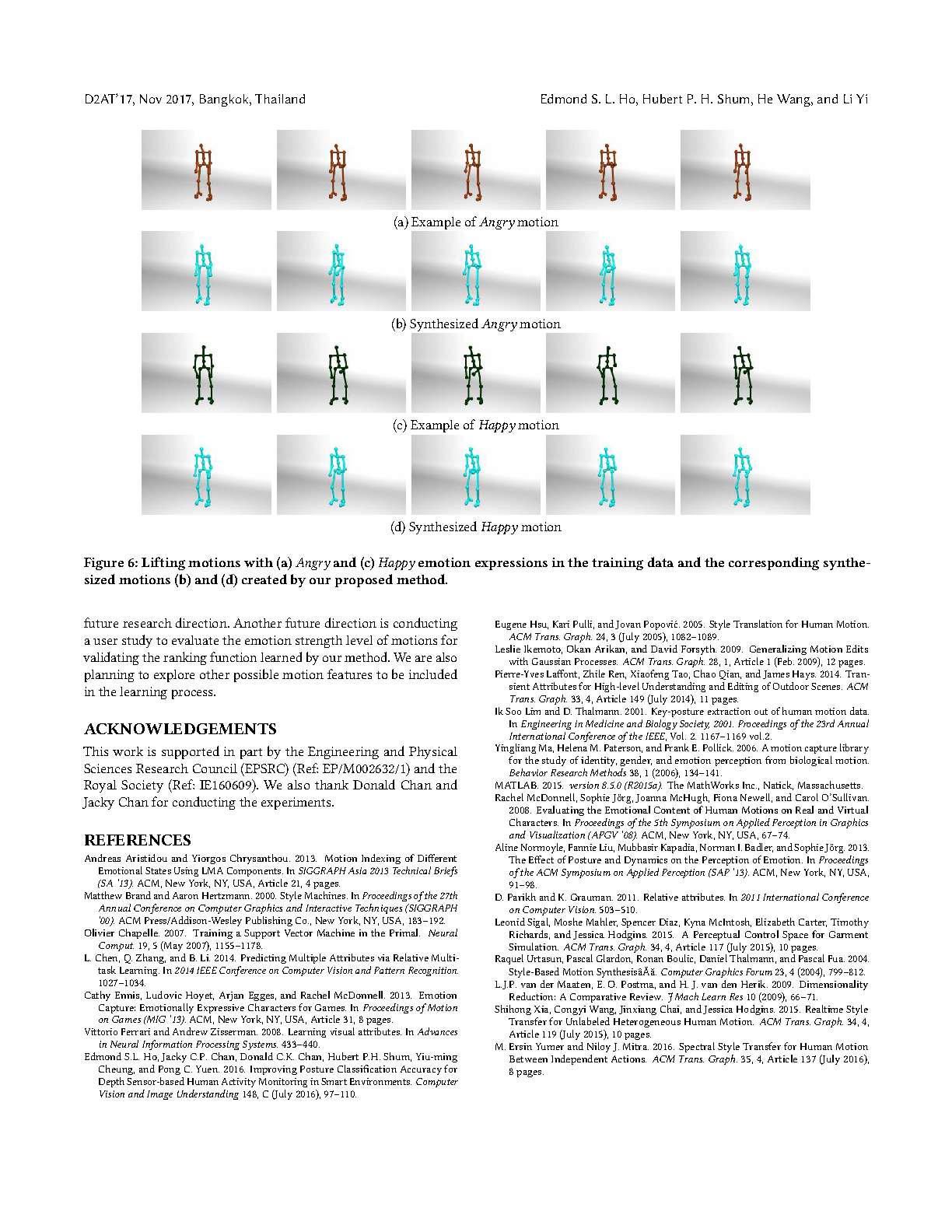

Synthesizing Motion with Relative Emotion Strength

Abstract

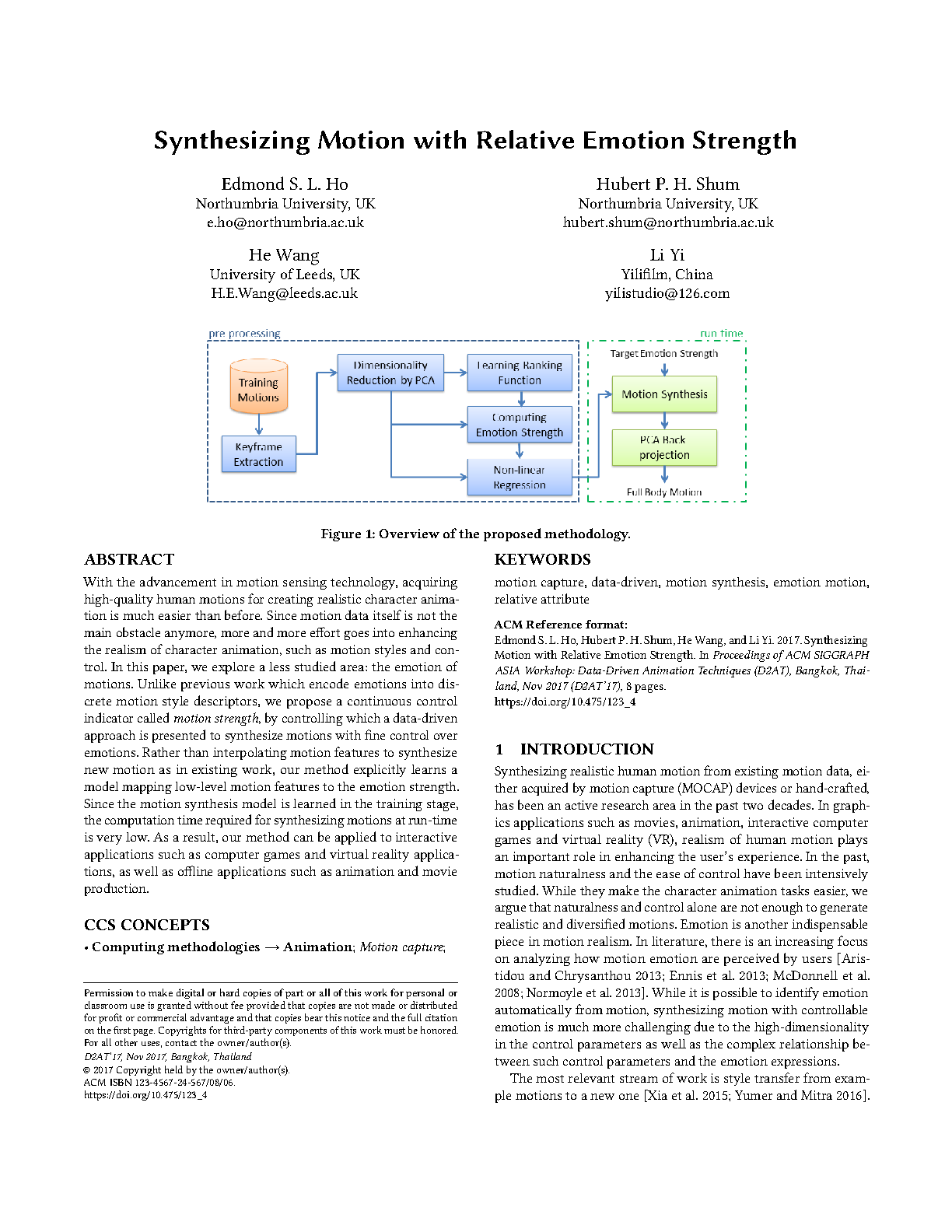

With advances in motion sensing technology, acquiring high-quality human motion for realistic character animation is much easier than before. Since motion data itself is no longer the main obstacle, more and more effort goes into enhancing the realism of character animation, for example through motion styles and control. In this paper, we explore a less studied area: the emotion of motions. Unlike previous work, which encodes emotions into discrete motion style descriptors, we propose a continuous control indicator called emotion strength and present a data-driven approach that uses it to synthesize motions with fine control over emotions. Rather than interpolating motion features to synthesize new motion as in existing work, our method explicitly learns a model mapping low-level motion features to the emotion strength. Since the motion synthesis model is learned in the training stage, the computation time required for synthesizing motions at run-time is very low. As a result, our method can be applied to interactive applications such as computer games and virtual reality, as well as offline applications such as animation and movie production.

Publication

Synthesizing Motion with Relative Emotion Strength by Yijun Shen, He Wang, Edmond S. L. Ho, Longzhi Yang and Hubert P. H. Shum in 2017

Proceedings of the 2017 ACM SIGGRAPH Asia Workshop on Data-Driven Animation Techniques (D2AT)

Links and Downloads