Synthesizing Expressive Facial and Speech Animation by Text-to-IPA Translation with Emotion Control

Abstract

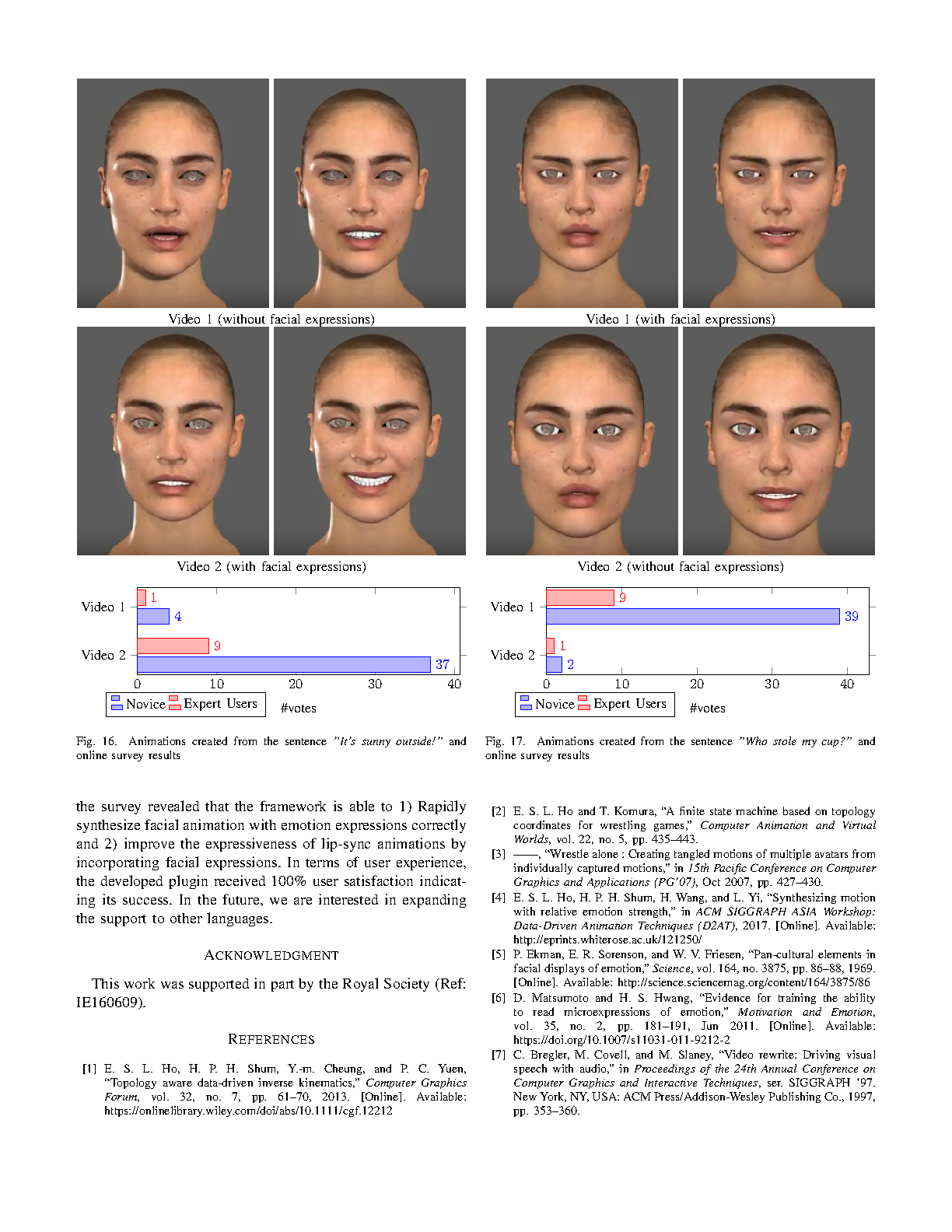

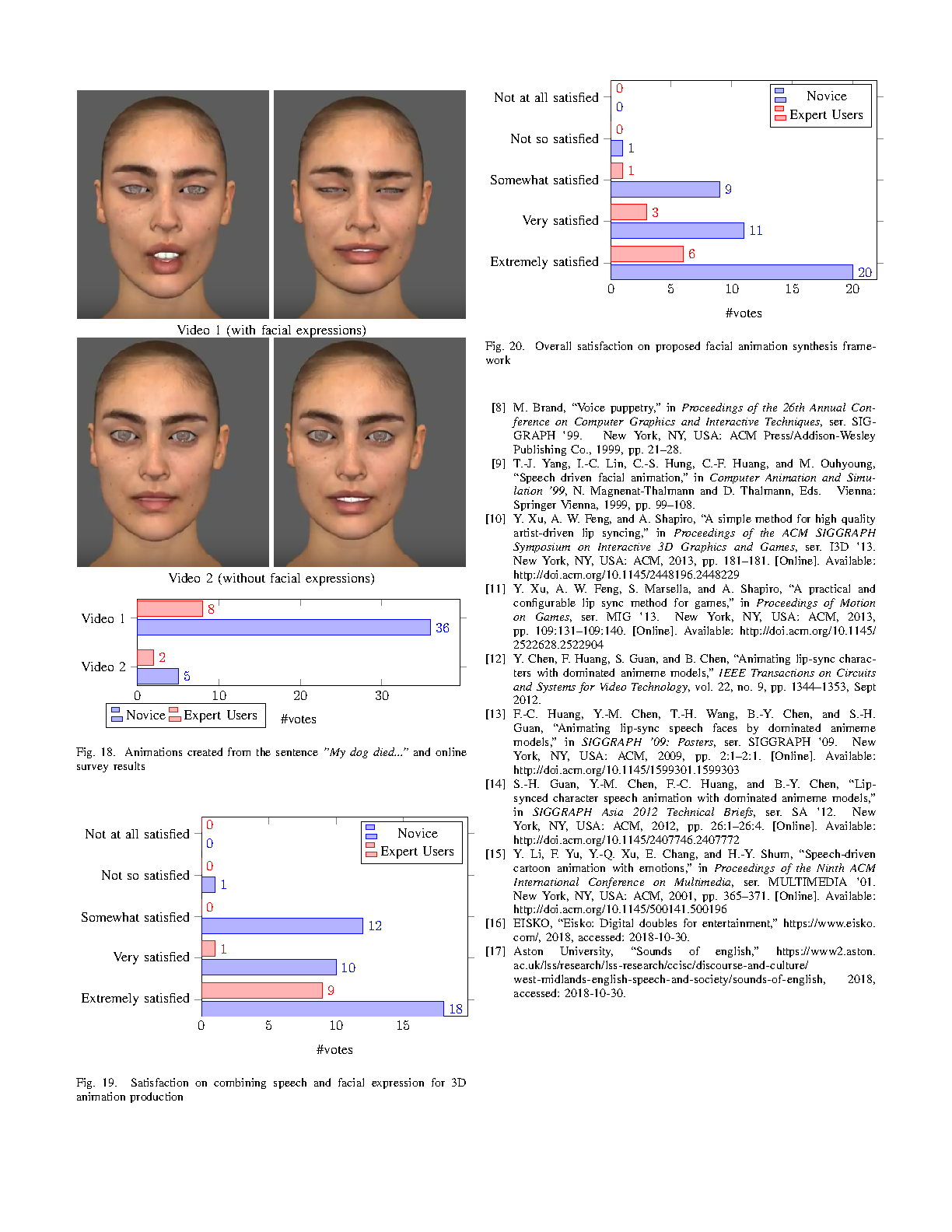

Given the complexity of human facial anatomy, animating facial expressions and lip movements for speech is a time-consuming and tedious task. In this paper, we propose a new text-to-animation framework for facial animation synthesis. The core idea is to improve the expressiveness of lip-sync animation by incorporating facial expressions into 3D animated characters. The method is implemented as a plug-in for Autodesk Maya, one of the most popular animation platforms in the industry, so that professional animators can apply it within their existing workflows. We evaluate the proposed system through two sets of surveys, in which both novice and experienced users provide feedback and evaluations from different perspectives. The survey results highlight the effectiveness of using emotion expressions to create realistic facial animations. Video demos of the synthesized animations are available online at https://git.io/fx5U3.
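To make the general idea of layering an emotion expression on top of phoneme-driven lip-sync more concrete, the following is a minimal sketch in Python. It is not the authors' Maya plug-in. The IPA-to-viseme table, the emotion presets, the `Keyframe` type and the `synthesize` function are all hypothetical and were chosen only for illustration. The sketch assumes the text has already been translated into a sequence of IPA symbols with durations. Each symbol is mapped to a mouth-shape (viseme) weight, and a scaled emotion preset is added on top, with every weight clamped to [0, 1].

```python
from dataclasses import dataclass

# Hypothetical mapping from a few IPA symbols to viseme (mouth-shape) names.
# A real system would cover the full phoneme inventory; this subset is illustrative.
IPA_TO_VISEME = {
    "p": "MBP", "b": "MBP", "m": "MBP",
    "f": "FV", "v": "FV",
    "ɑ": "AA", "a": "AA",
    "i": "EE", "e": "EE",
    "u": "OO", "o": "OO",
}

# Hypothetical emotion presets: blendshape name -> weight in [0, 1].
EMOTIONS = {
    "happy": {"smile": 0.8, "brow_raise": 0.3},
    "sad": {"frown": 0.7, "brow_inner_up": 0.5},
    "neutral": {},
}


@dataclass
class Keyframe:
    time: float                # seconds from the start of the utterance
    weights: dict[str, float]  # viseme/blendshape name -> weight in [0, 1]


def synthesize(phonemes, emotion="neutral", intensity=1.0):
    """Turn (ipa_symbol, duration) pairs into keyframes with an emotion layer.

    Unknown IPA symbols fall back to a closed-mouth 'REST' viseme.
    Emotion weights are scaled by `intensity`, added to the viseme weights,
    and clamped to [0, 1].
    """
    # Scale the chosen emotion preset once; it is applied to every keyframe.
    expression = {k: min(1.0, v * intensity) for k, v in EMOTIONS[emotion].items()}
    keyframes = []
    t = 0.0
    for symbol, duration in phonemes:
        viseme = IPA_TO_VISEME.get(symbol, "REST")
        weights = {viseme: 1.0}
        # Layer the facial expression on top of the lip shape.
        for name, w in expression.items():
            weights[name] = min(1.0, weights.get(name, 0.0) + w)
        keyframes.append(Keyframe(time=t, weights=weights))
        t += duration
    return keyframes


# Usage: "mama" as IPA /m ɑ m ɑ/, 0.1 s per phoneme, half-intensity happy face.
for kf in synthesize([("m", 0.1), ("ɑ", 0.1), ("m", 0.1), ("ɑ", 0.1)], "happy", 0.5):
    print(f"{kf.time:.1f}s", kf.weights)
```

Adding a constant expression layer is the simplest possible design. A production tool would more likely interpolate between keyframes and let the expression vary over time, but those details are outside what this page describes.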

Publication

Synthesizing Expressive Facial and Speech Animation by Text-to-IPA Translation with Emotion Control by Yijun Shen, Joseph Henry, He Wang, Edmond S. L. Ho, Taku Komura and Hubert P. H. Shum in 2020

Proceedings of the 2018 International Conference on Software Knowledge Information Management and Applications (SKIMA)

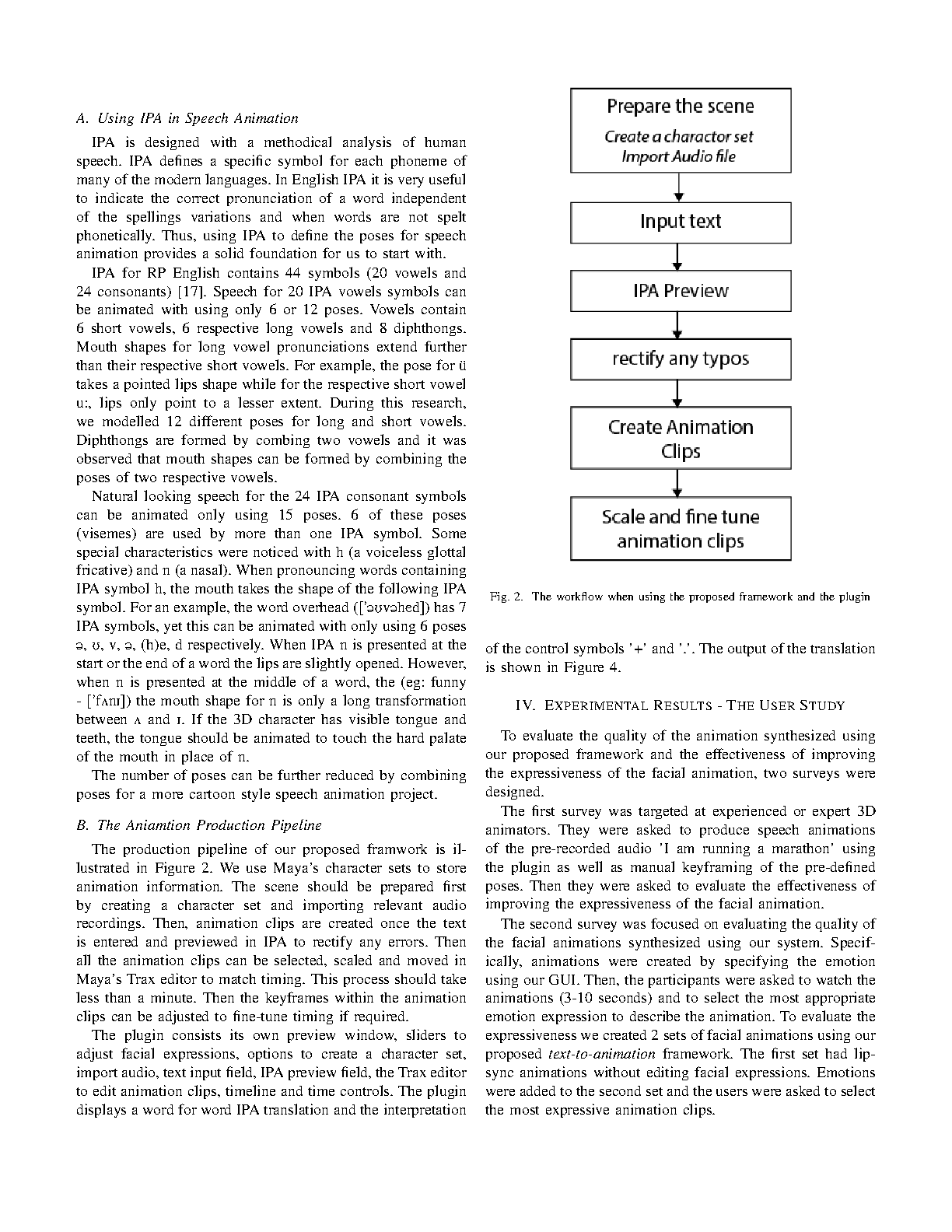

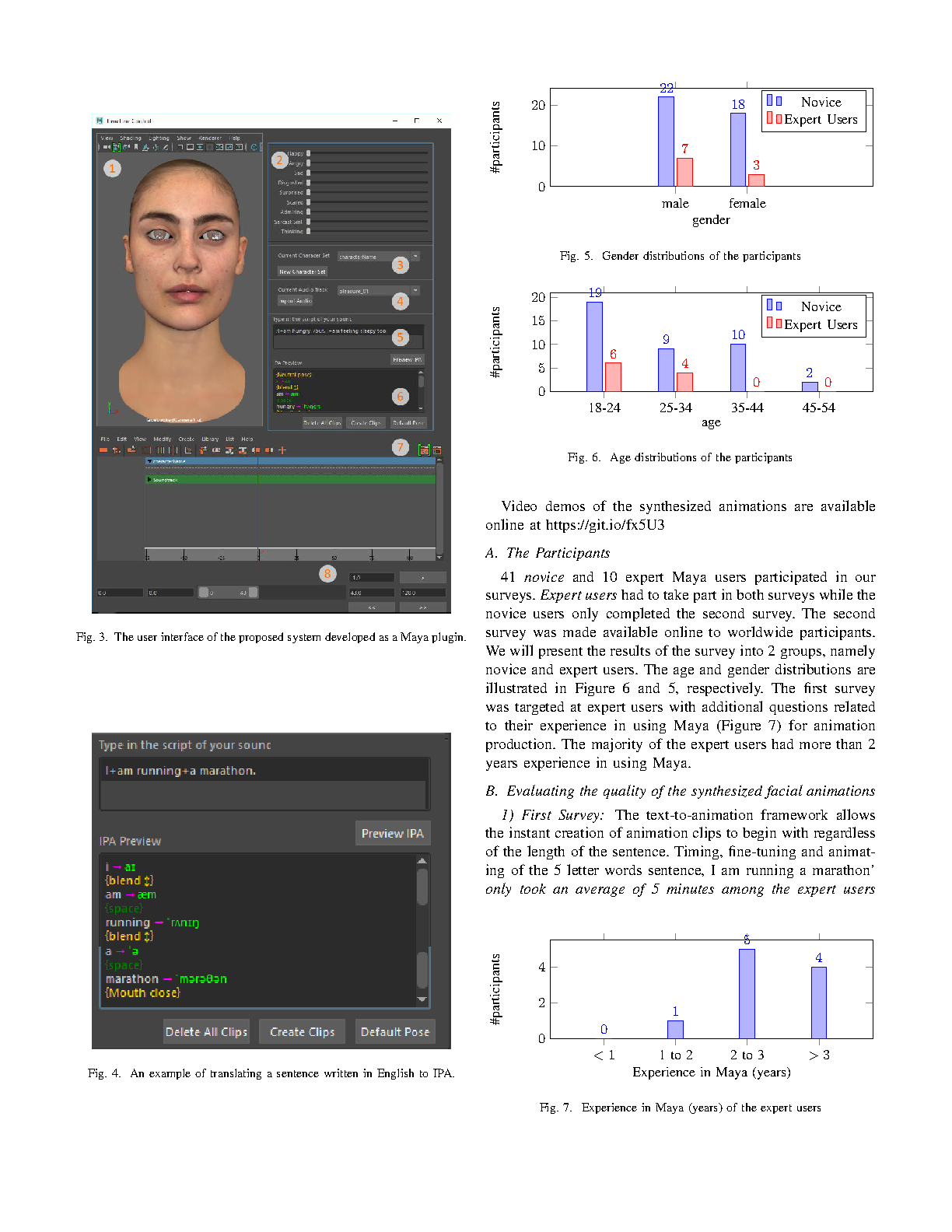

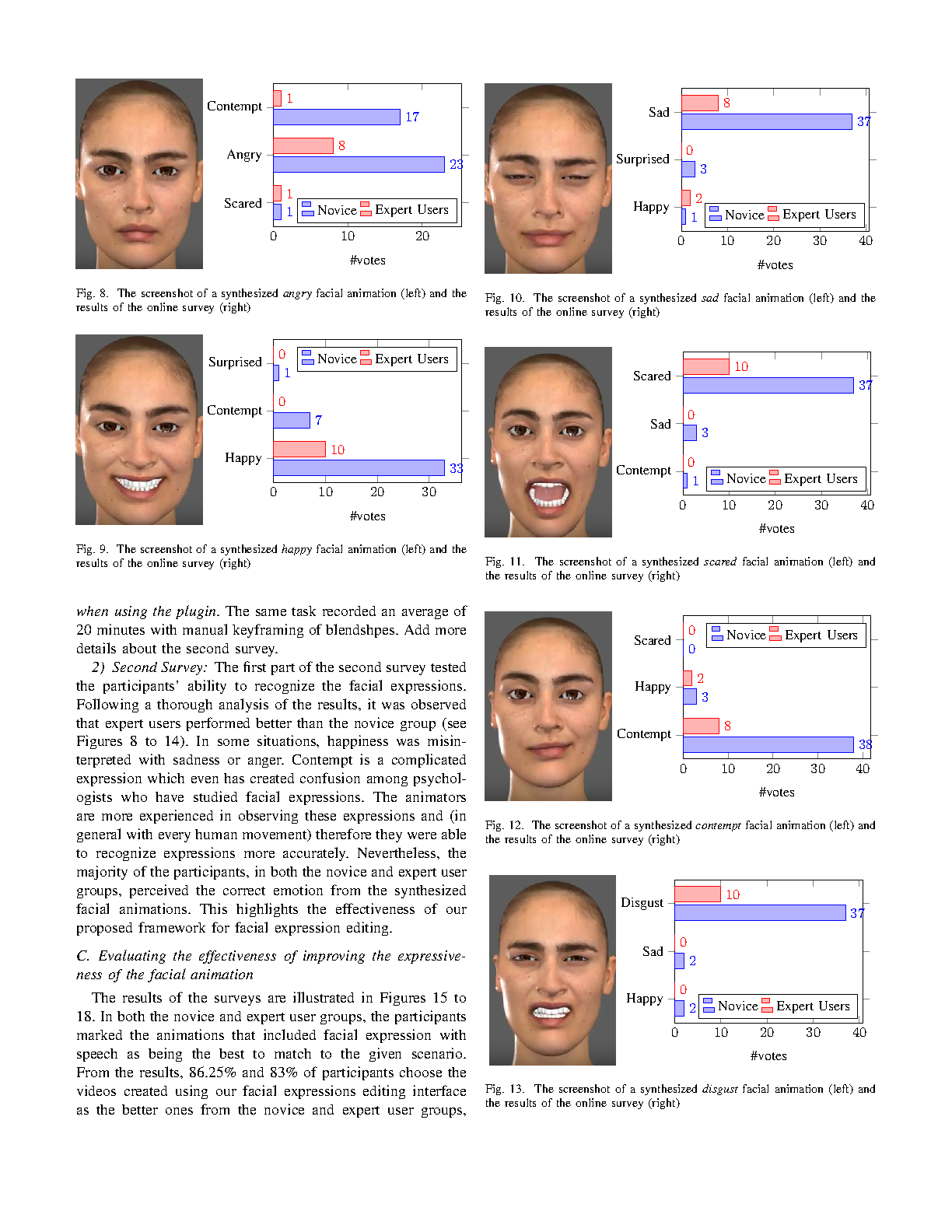

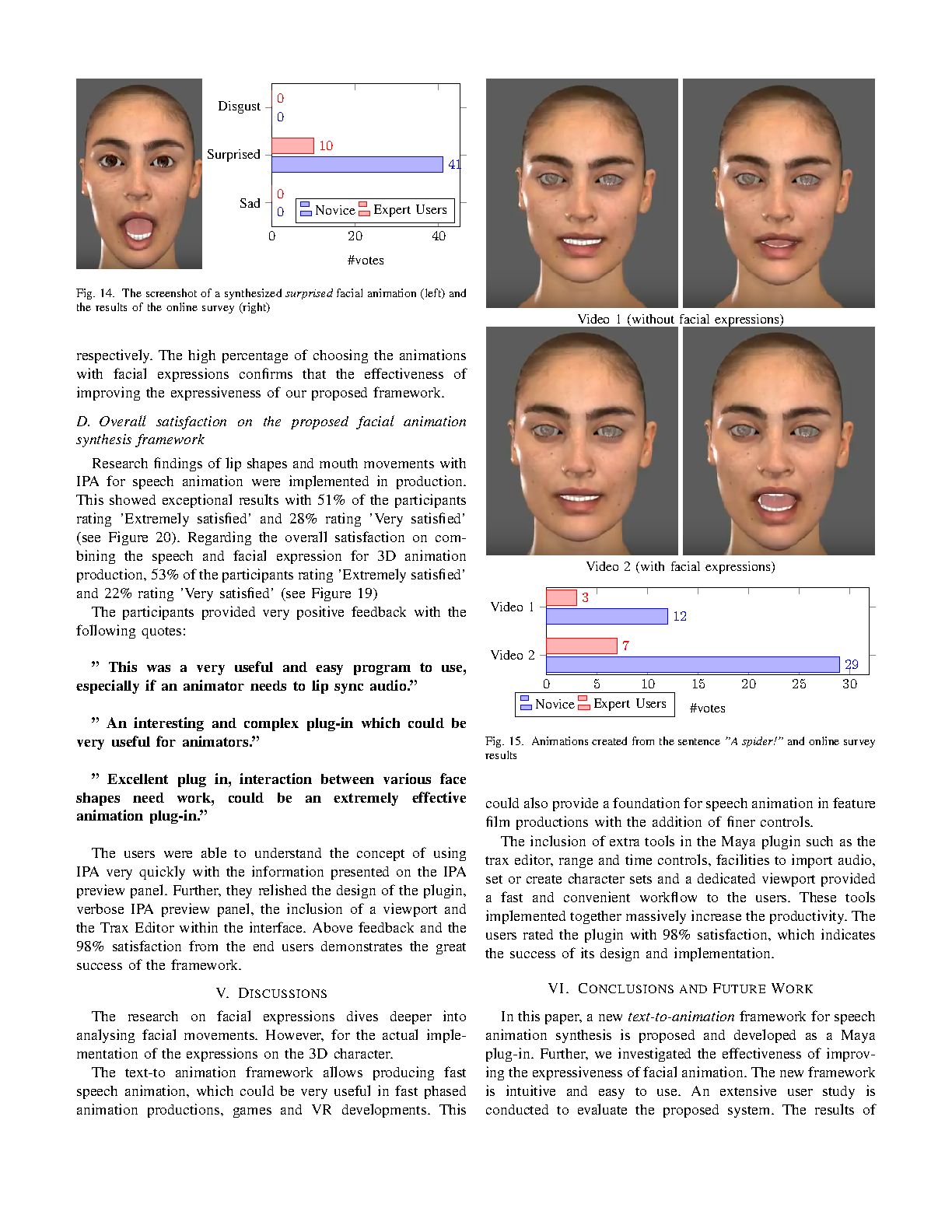

Links and Downloads