Human Action Recognition via Skeletal and Depth based Feature Fusion

Abstract

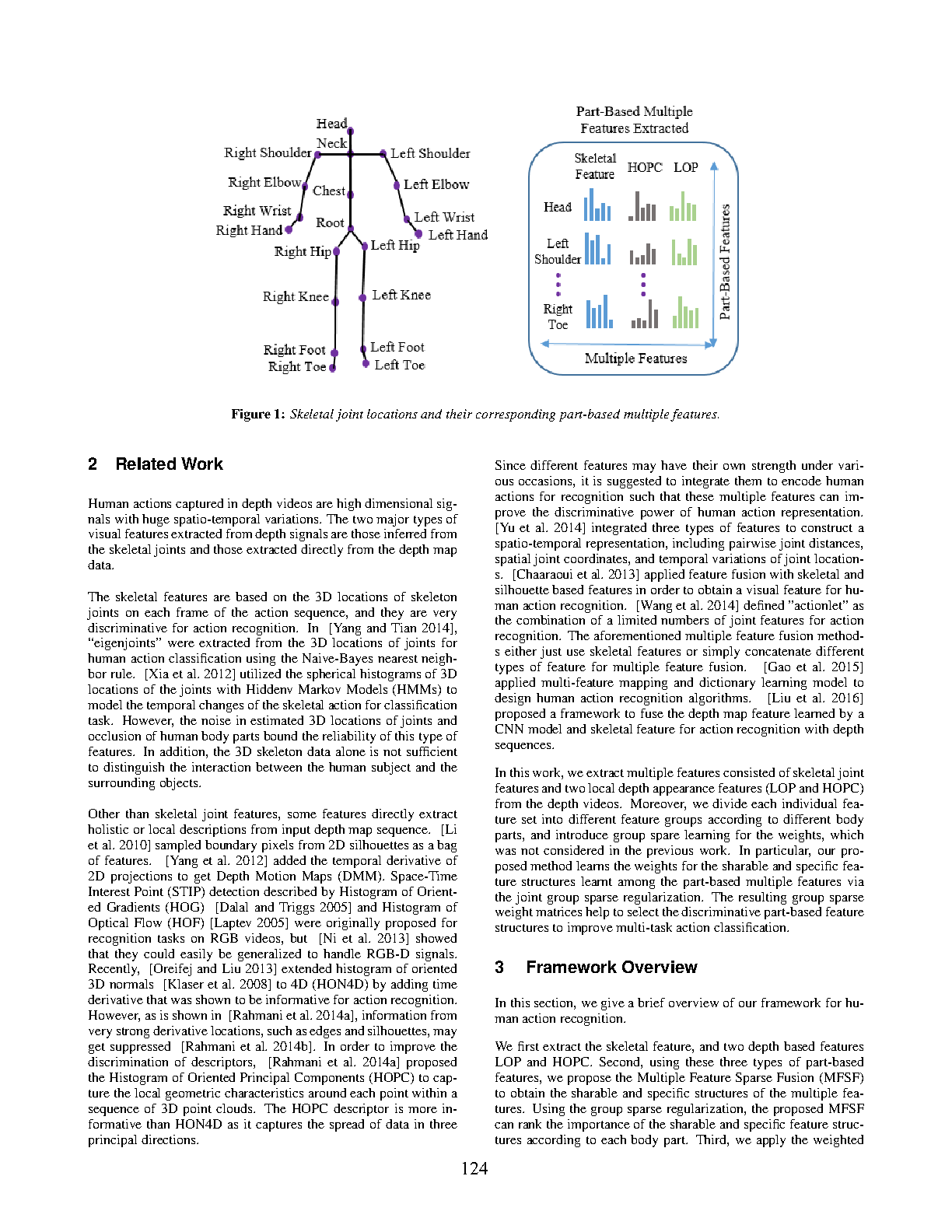

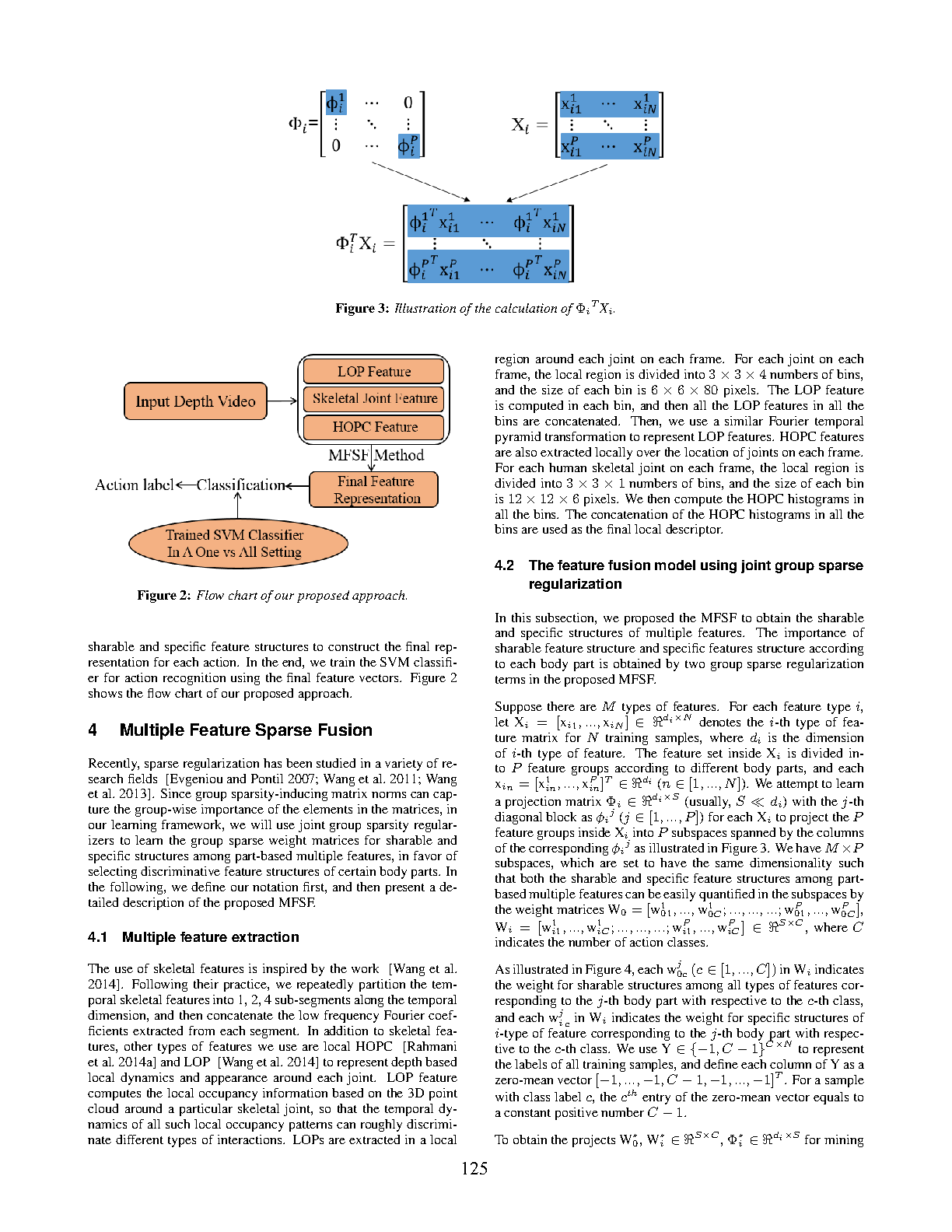

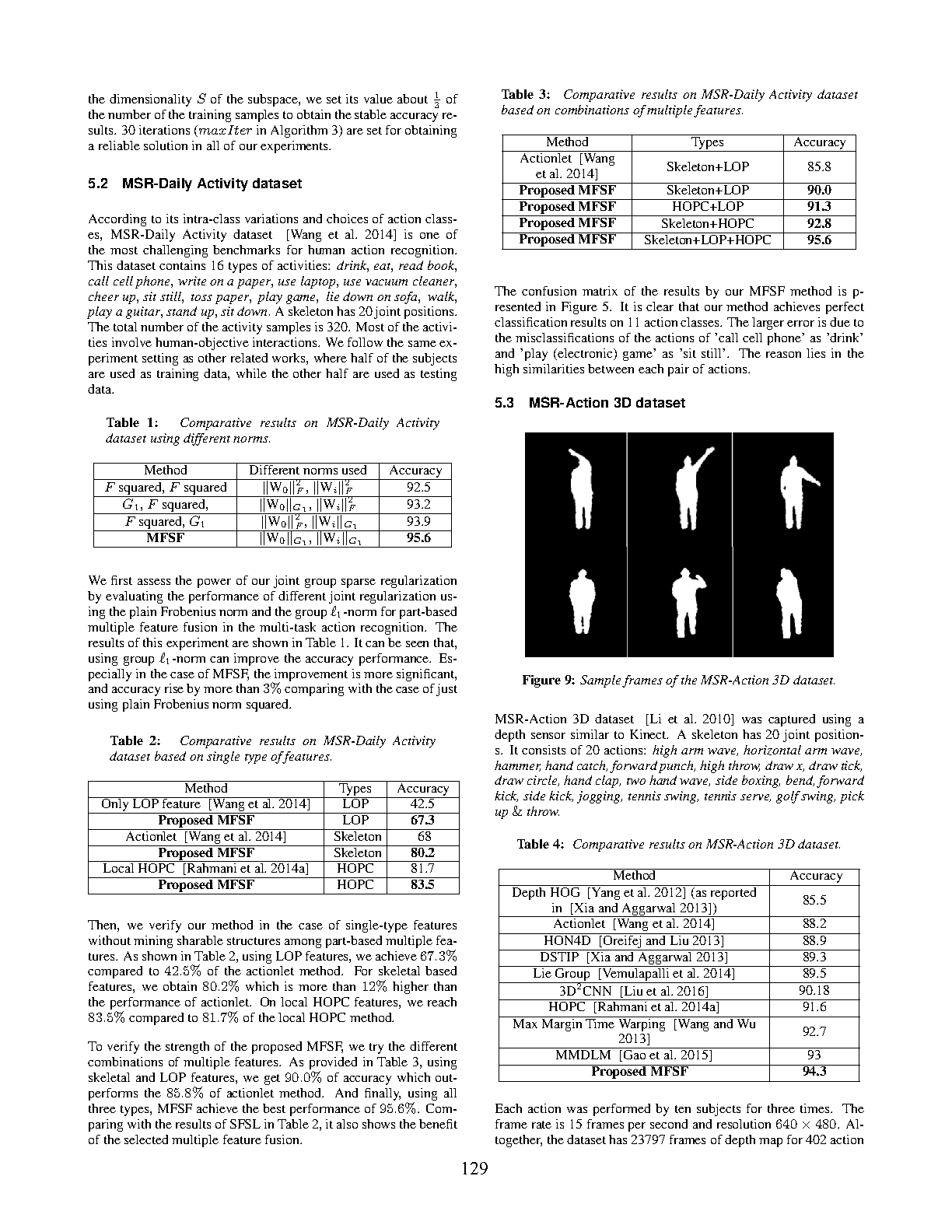

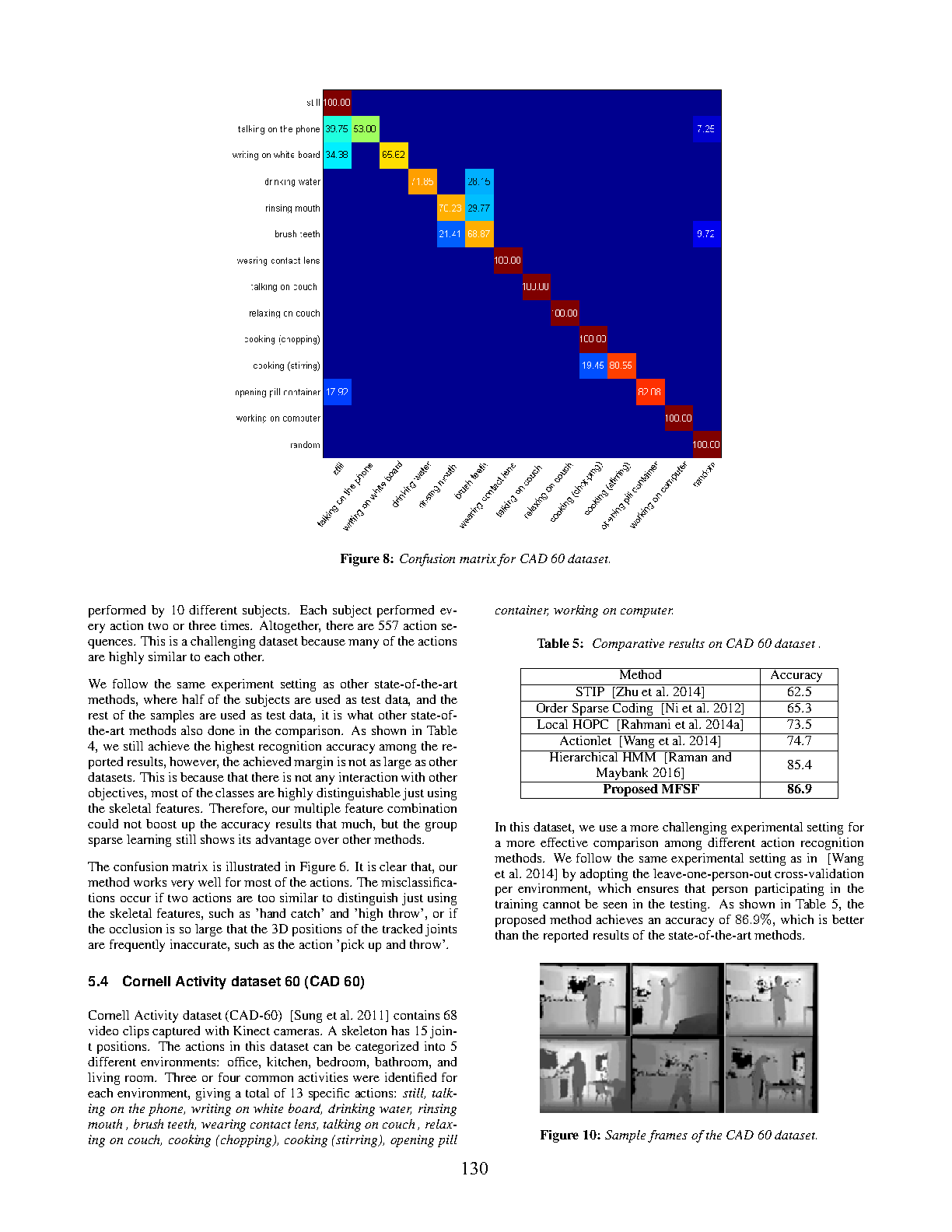

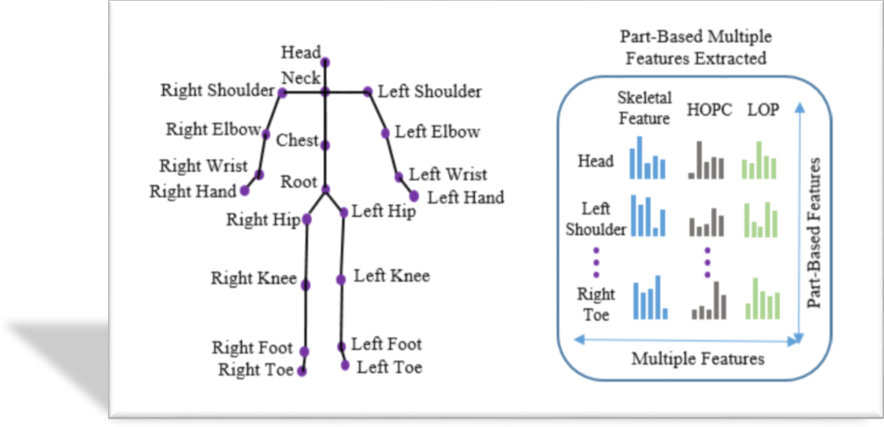

This paper addresses the problem of recognizing human actions captured with depth cameras. Human action recognition is a challenging task because articulated action data is high-dimensional in both the spatial and temporal domains. An effective approach to handling this complexity is to divide the human body into parts according to skeletal joint positions and to perform recognition based on part-based feature descriptors. Since different types of features may share similar hidden structures, and different actions may be well characterized by properties common to all features (sharable structure) and properties specific to a single feature (specific structure), we propose a joint group sparse regression-based learning method to model each action. Our method mines the sharable and specific structures among the part-based multiple features while weighting the importance of these part-based feature structures through joint group sparse regularization, which favors the selection of discriminative part-based feature structures. To represent the dynamics and appearance of the human body parts, we employ part-based multiple features extracted from skeleton and depth data, respectively. Then, using group sparse regularization techniques, we derive an algorithm for mining the key part-based features in the proposed learning framework. The resulting features derived from the learnt weight matrices are more discriminative for multi-task classification. Through extensive experiments on three public datasets, we demonstrate that our approach outperforms existing methods.
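To give a rough feel for how group sparse regularization can select whole blocks of part-based features, the sketch below fits a generic group-lasso style regression with proximal gradient descent. It is not the paper's joint formulation (it does not model sharable and specific structures separately), and the function names, the grouping of feature rows into "body parts", the synthetic data, and the value of `lam` are all illustrative assumptions.

```python
import numpy as np

def prox_group(W, groups, t):
    """Block soft-thresholding: shrink the Frobenius norm of each row group by t."""
    out = W.copy()
    for g in groups:
        norm = np.linalg.norm(W[g])
        scale = max(0.0, 1.0 - t / norm) if norm > 0 else 0.0
        out[g] = scale * W[g]
    return out

def group_sparse_regression(X, Y, groups, lam=1.0, n_iter=500):
    """Minimise 0.5*||XW - Y||_F^2 + lam * sum_g ||W_g||_F (hypothetical helper)."""
    W = np.zeros((X.shape[1], Y.shape[1]))
    step = 1.0 / (np.linalg.norm(X, 2) ** 2)  # 1 / Lipschitz constant of the gradient
    for _ in range(n_iter):
        grad = X.T @ (X @ W - Y)
        W = prox_group(W - step * grad, groups, step * lam)
    return W

# Synthetic example (assumed data): 3 "body parts" with 2 feature dimensions each,
# 3 action classes, and only the first part carrying information about the targets.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
W_true = np.zeros((6, 3))
W_true[:2] = [[1.0, -2.0, 0.5], [0.5, 1.0, -1.5]]
Y = X @ W_true + 0.01 * rng.normal(size=(200, 3))

groups = [slice(0, 2), slice(2, 4), slice(4, 6)]
W_hat = group_sparse_regression(X, Y, groups, lam=5.0)

# Per-part weight norms: a norm of zero means the whole part was discarded.
print([float(np.linalg.norm(W_hat[g])) for g in groups])
```

Because the penalty acts on whole row blocks of the weight matrix rather than on individual entries, uninformative parts are removed together, which is the general mechanism behind selecting discriminative part-based features.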

Publication

Human Action Recognition via Skeletal and Depth based Feature Fusion by Lining Zhang, Hubert P. H. Shum and Ling Shao in 2018

Proceedings of the 2016 International Conference on Motion in Games (MIG)

Links and Downloads