A Two-Stream Recurrent Network for Skeleton-Based Human Interaction Recognition

Abstract

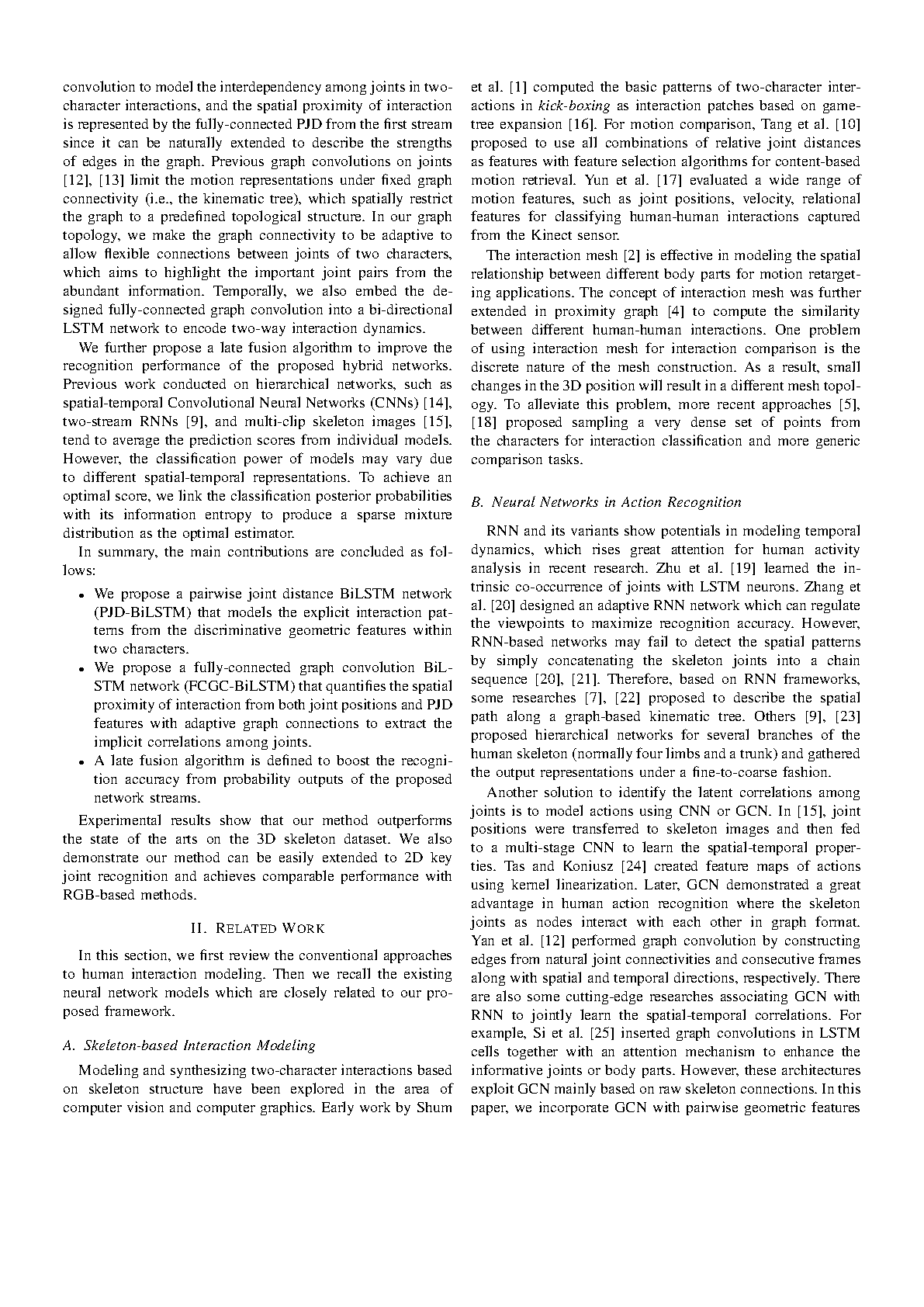

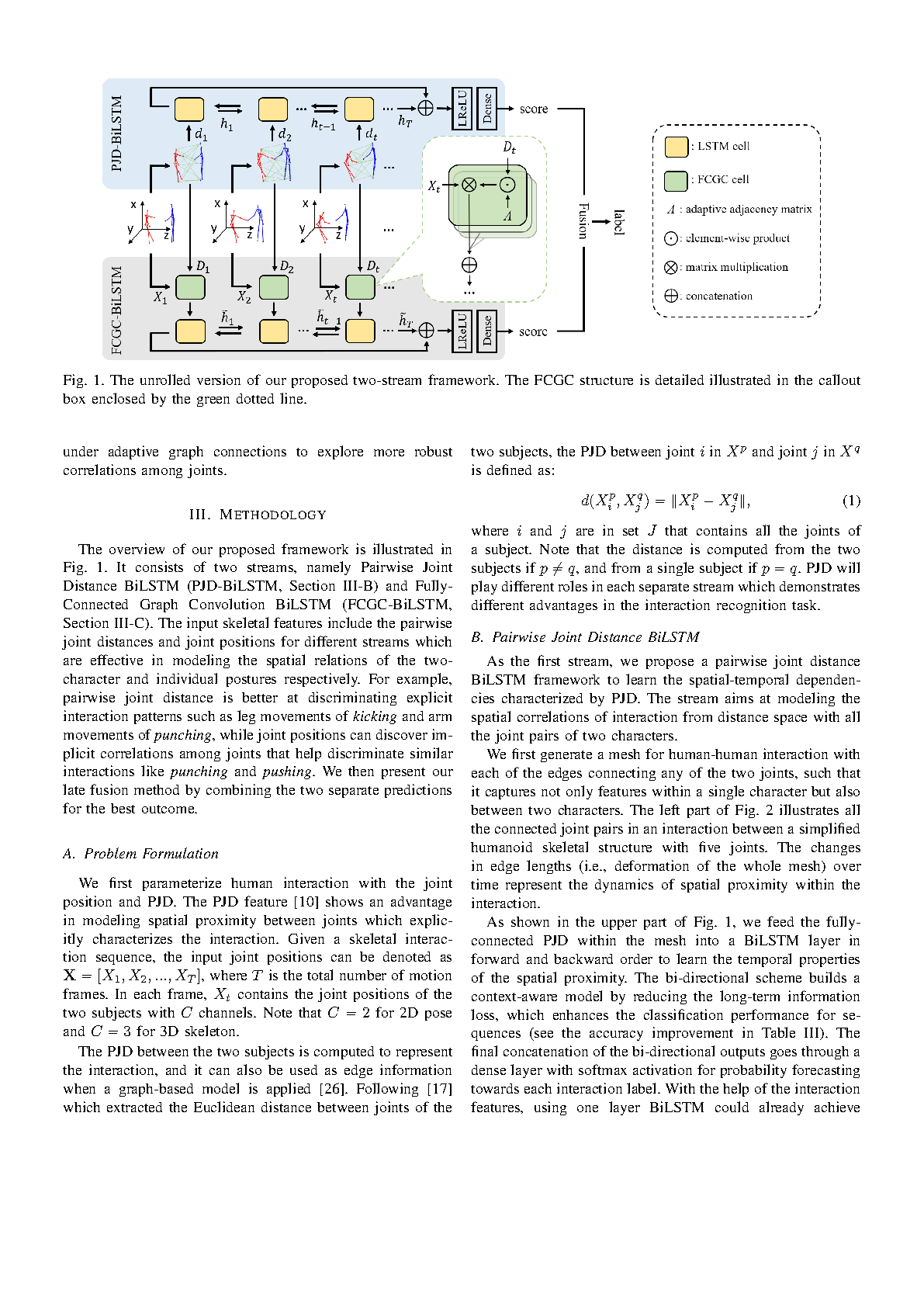

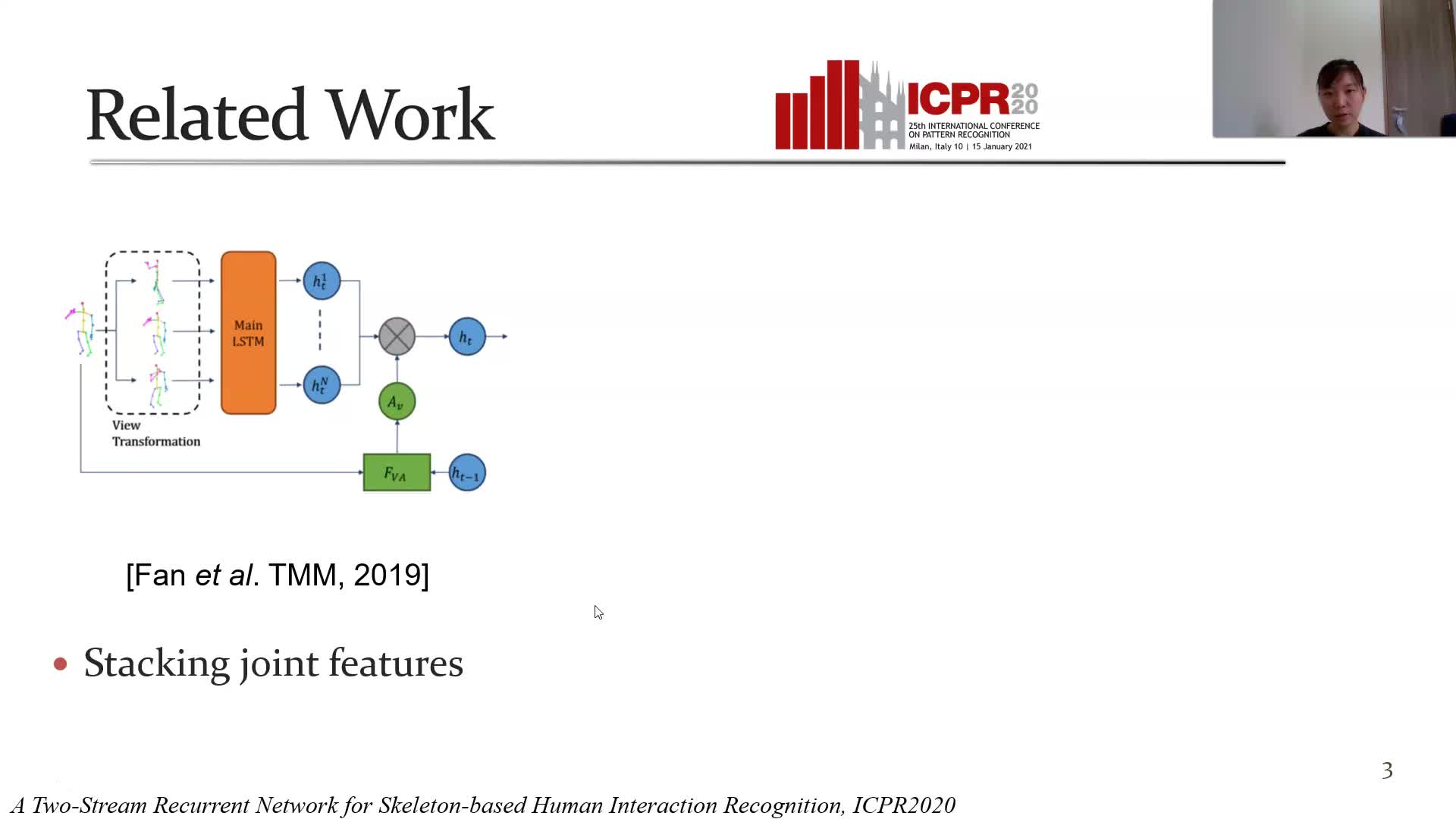

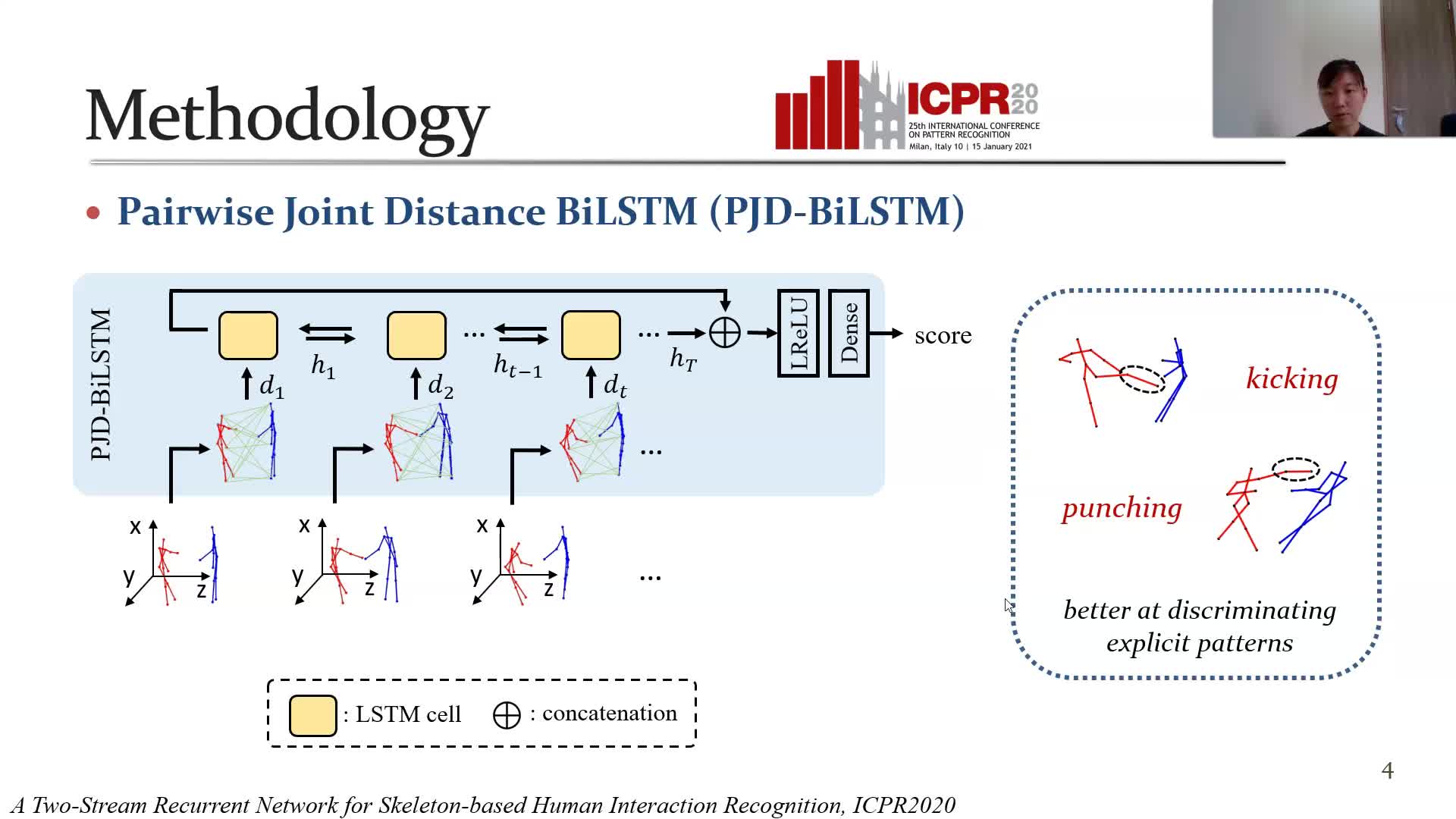

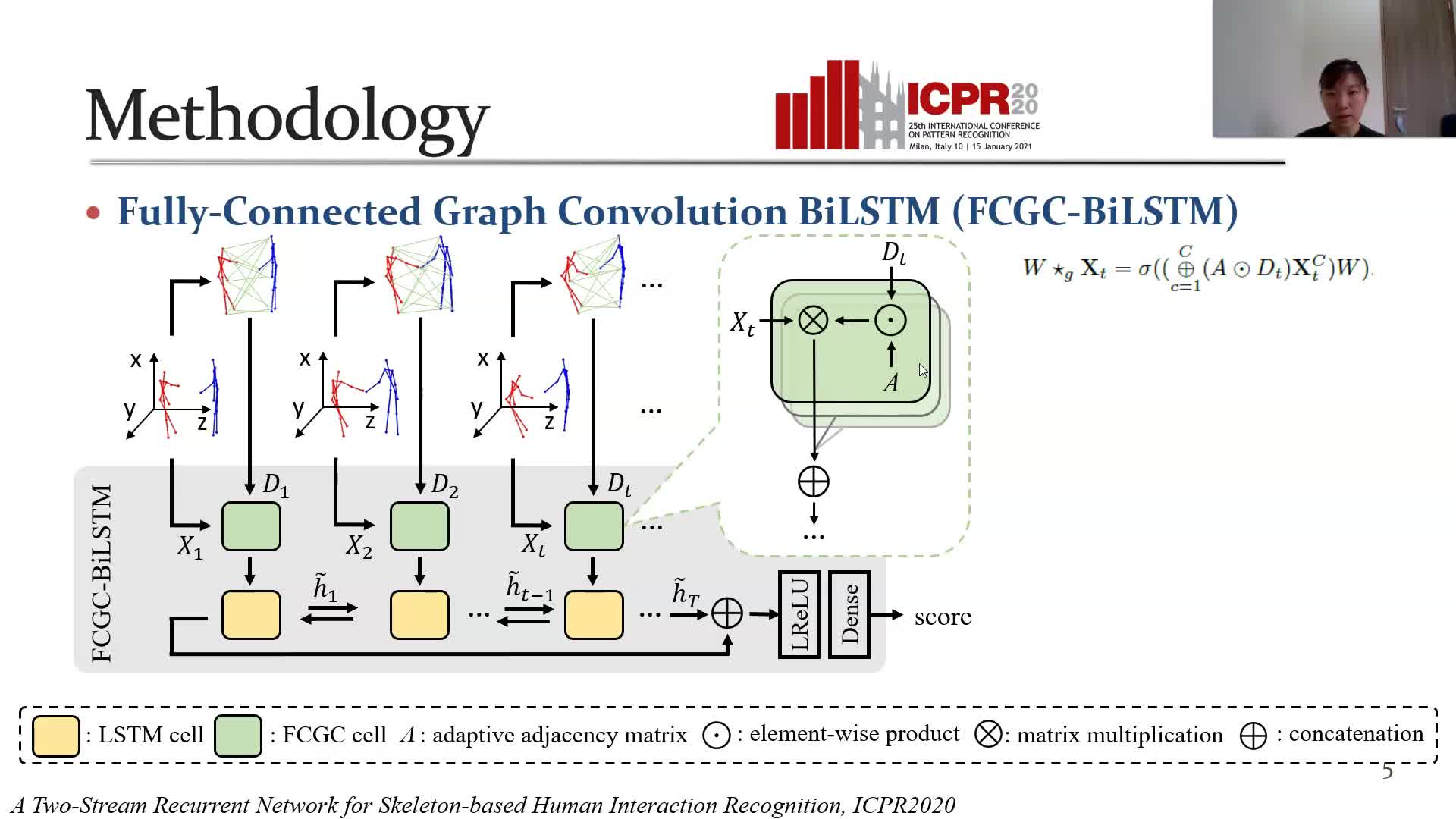

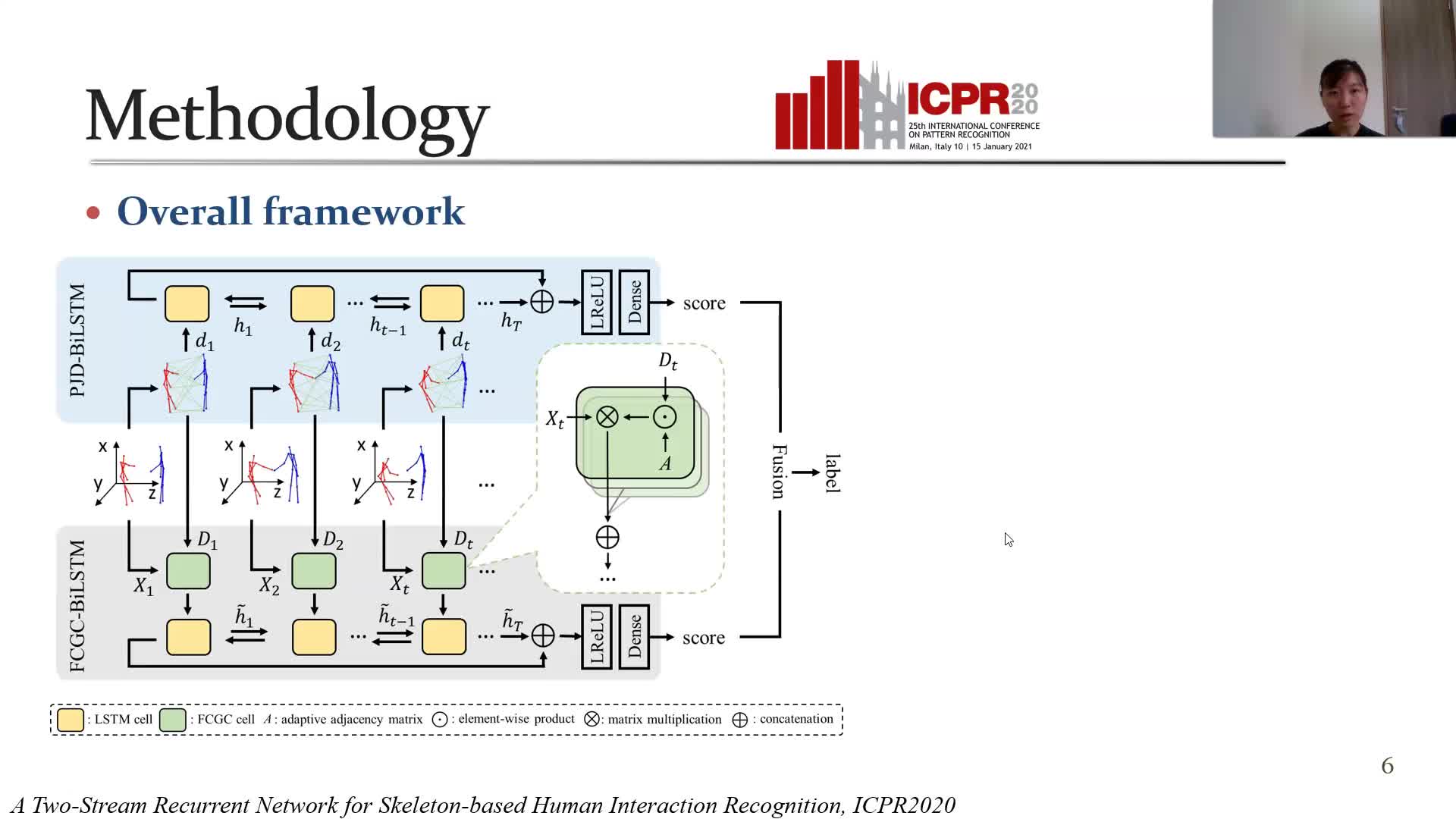

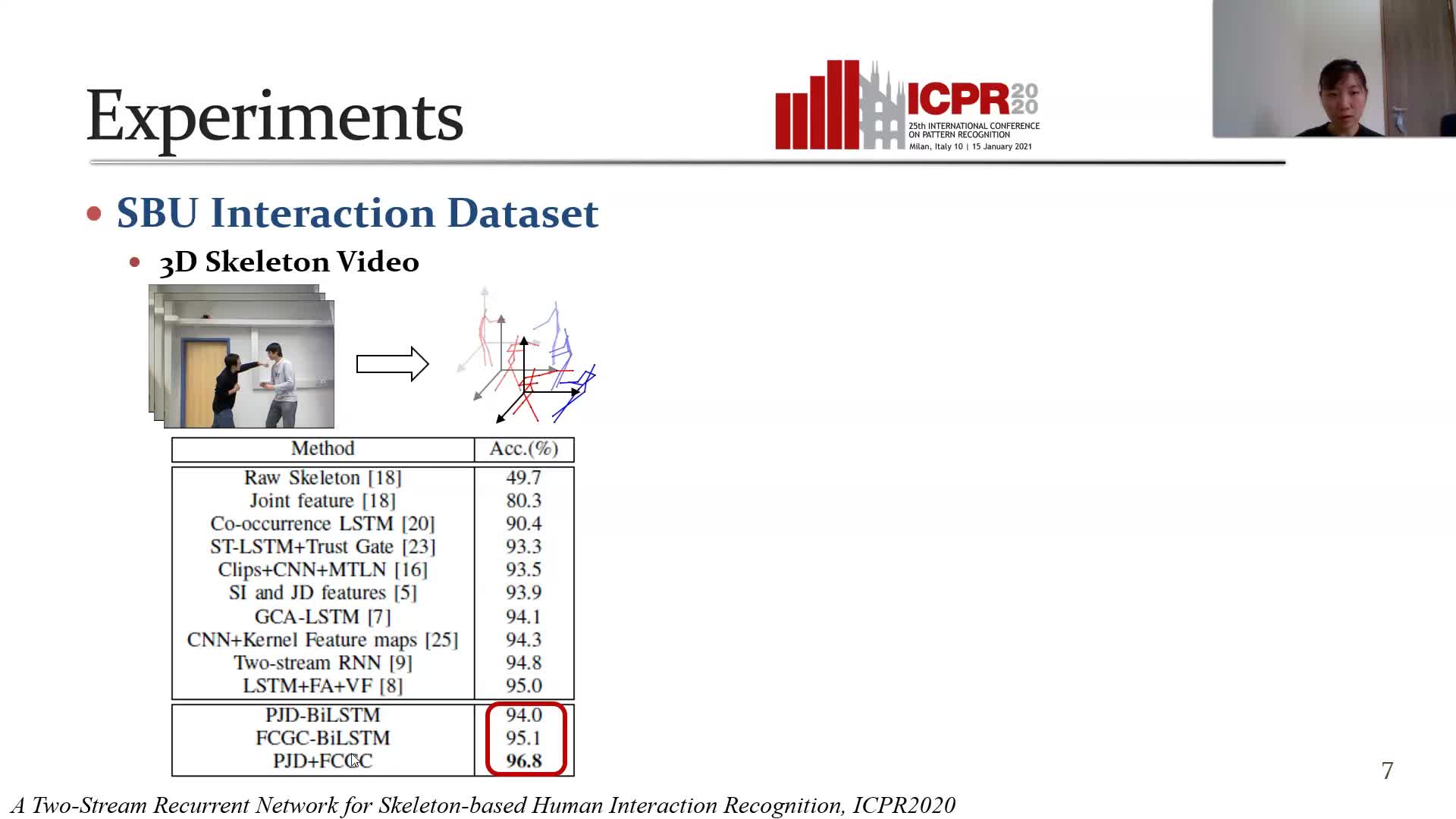

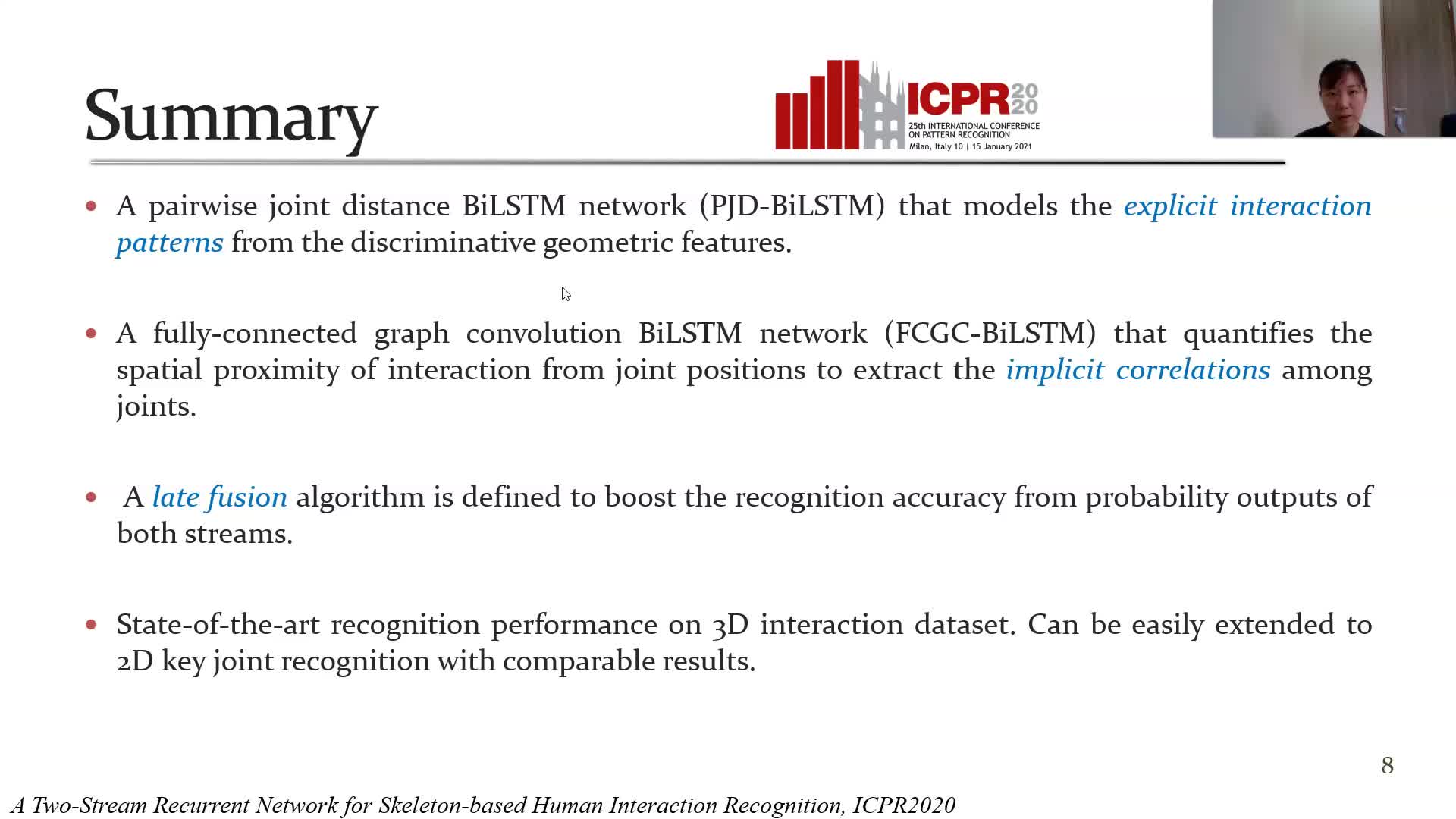

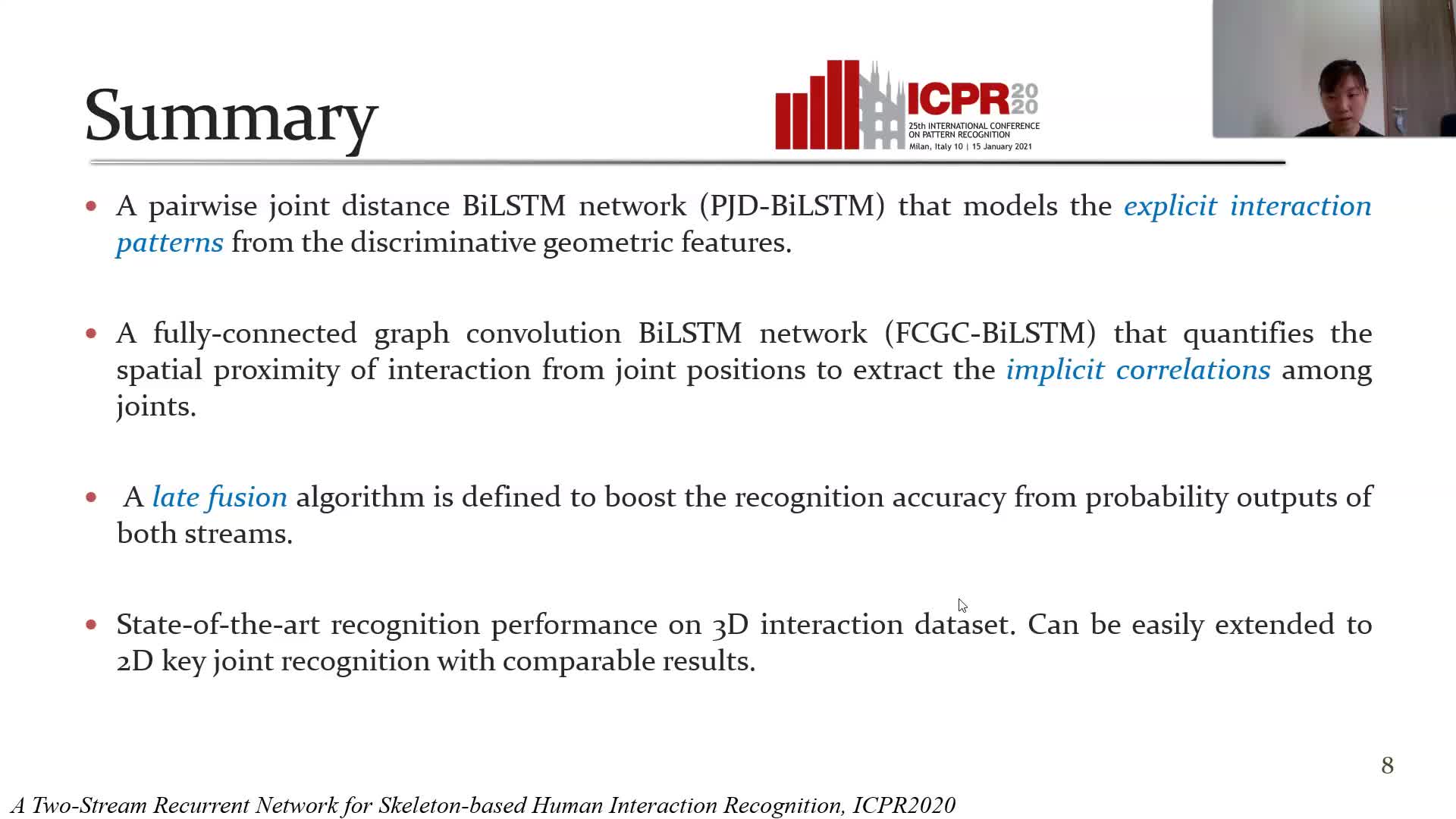

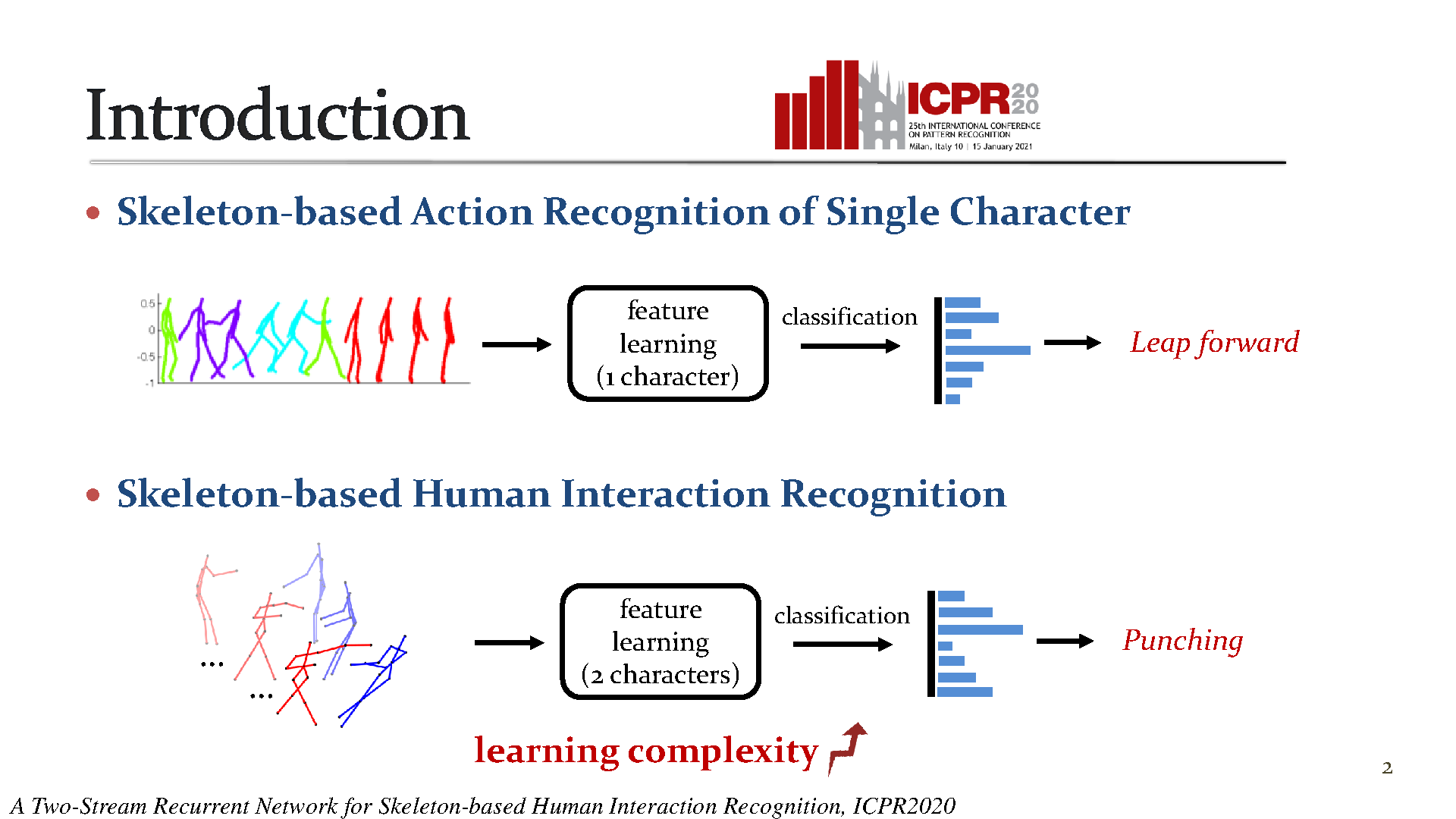

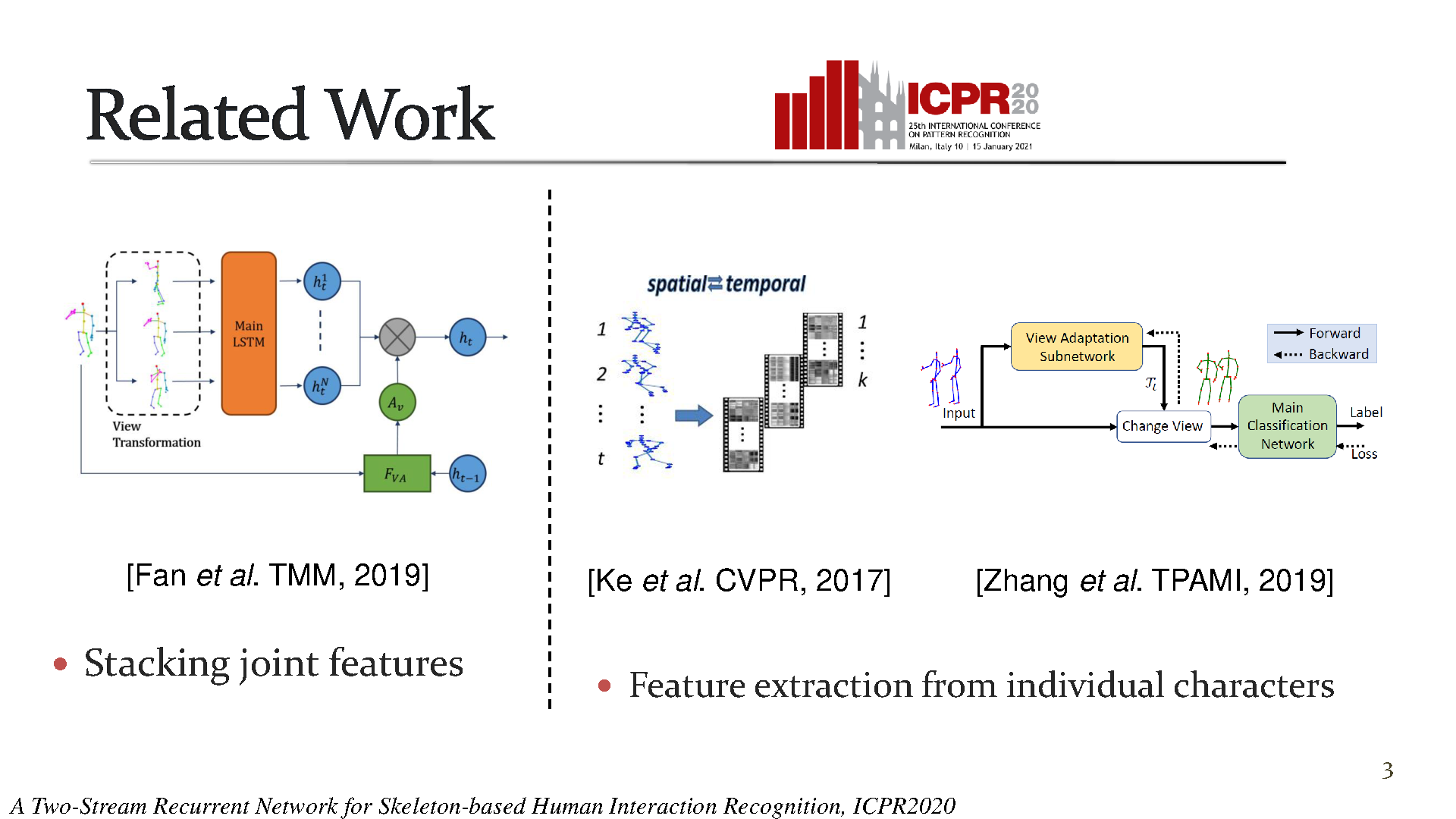

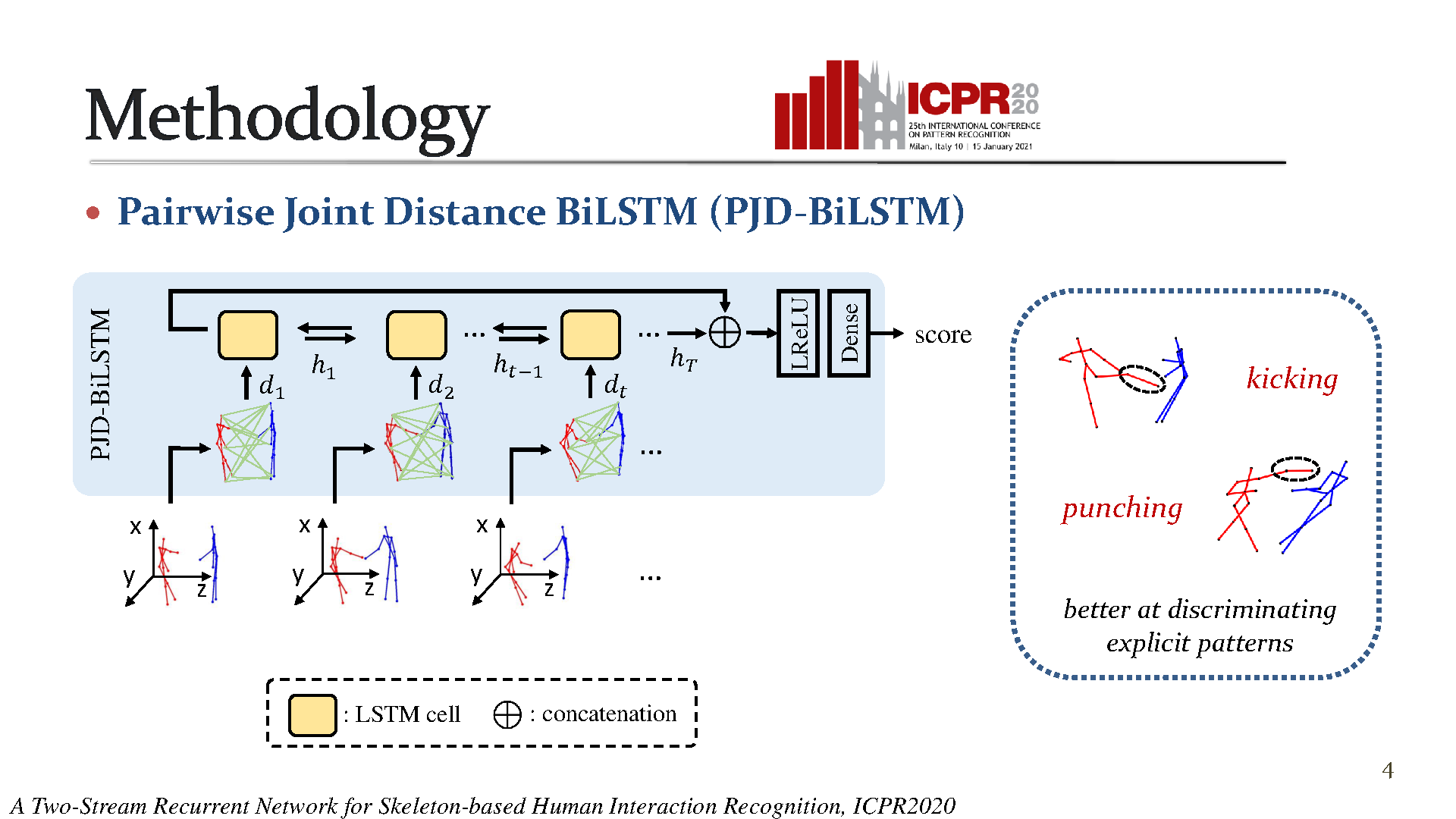

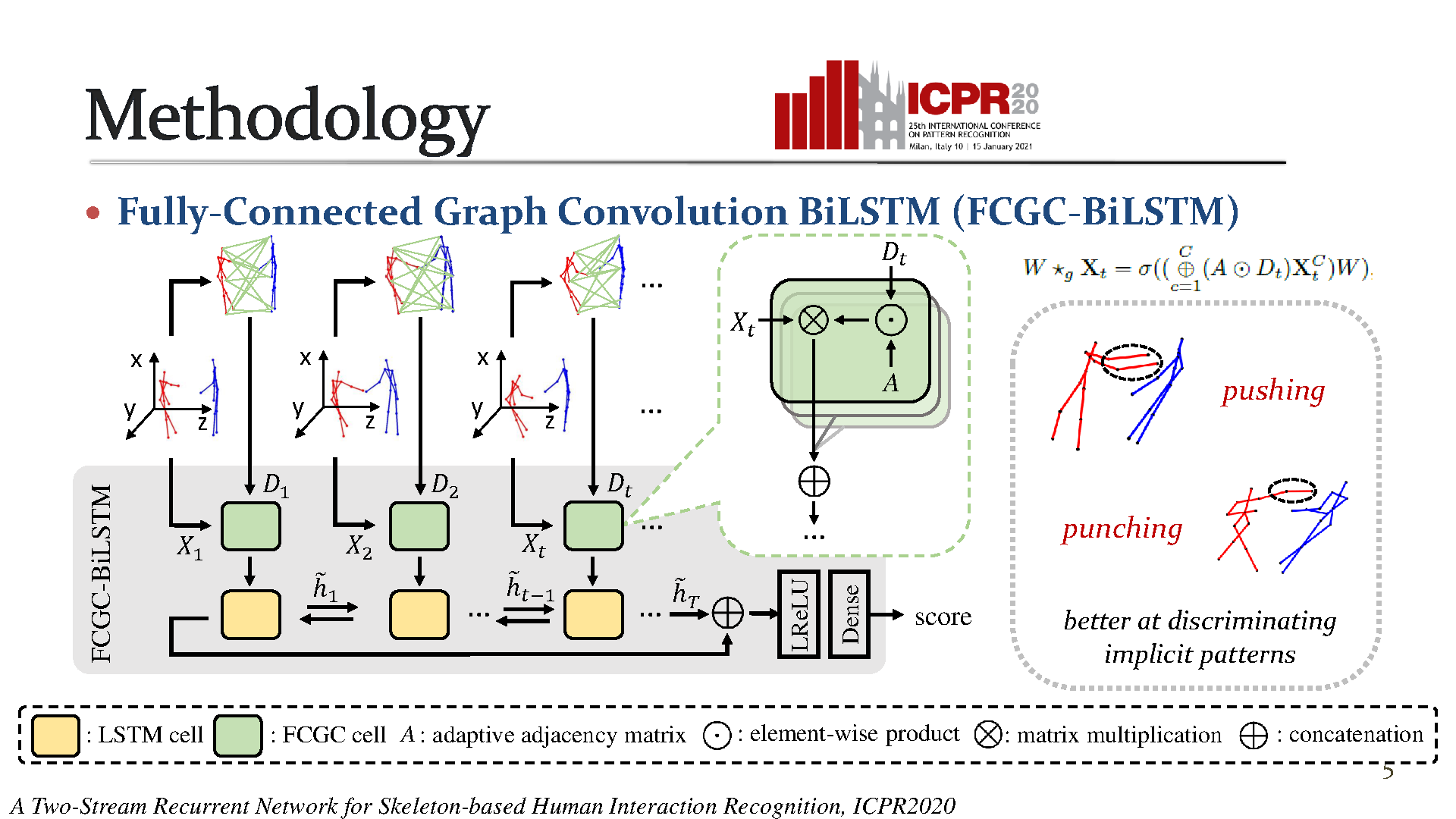

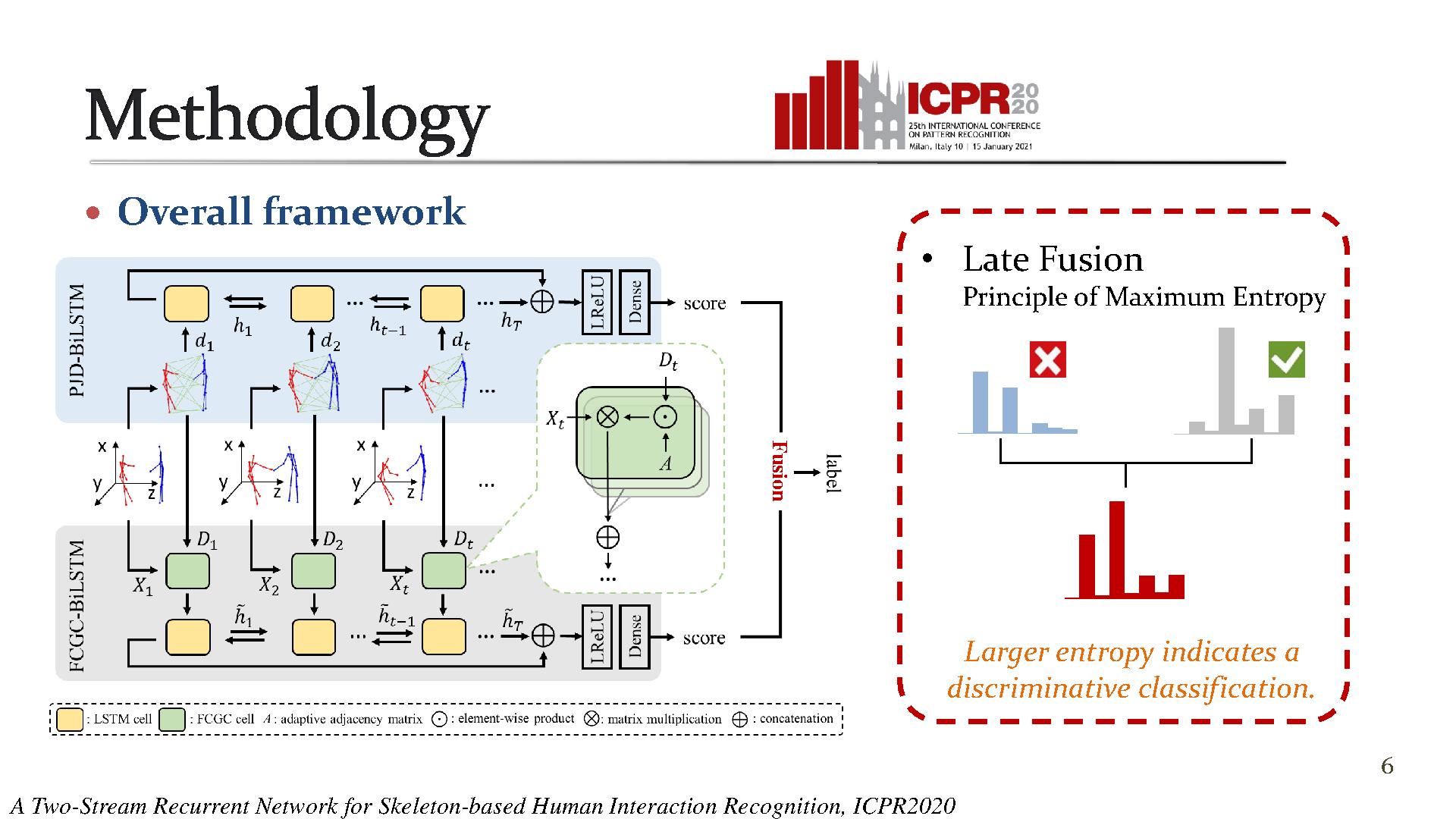

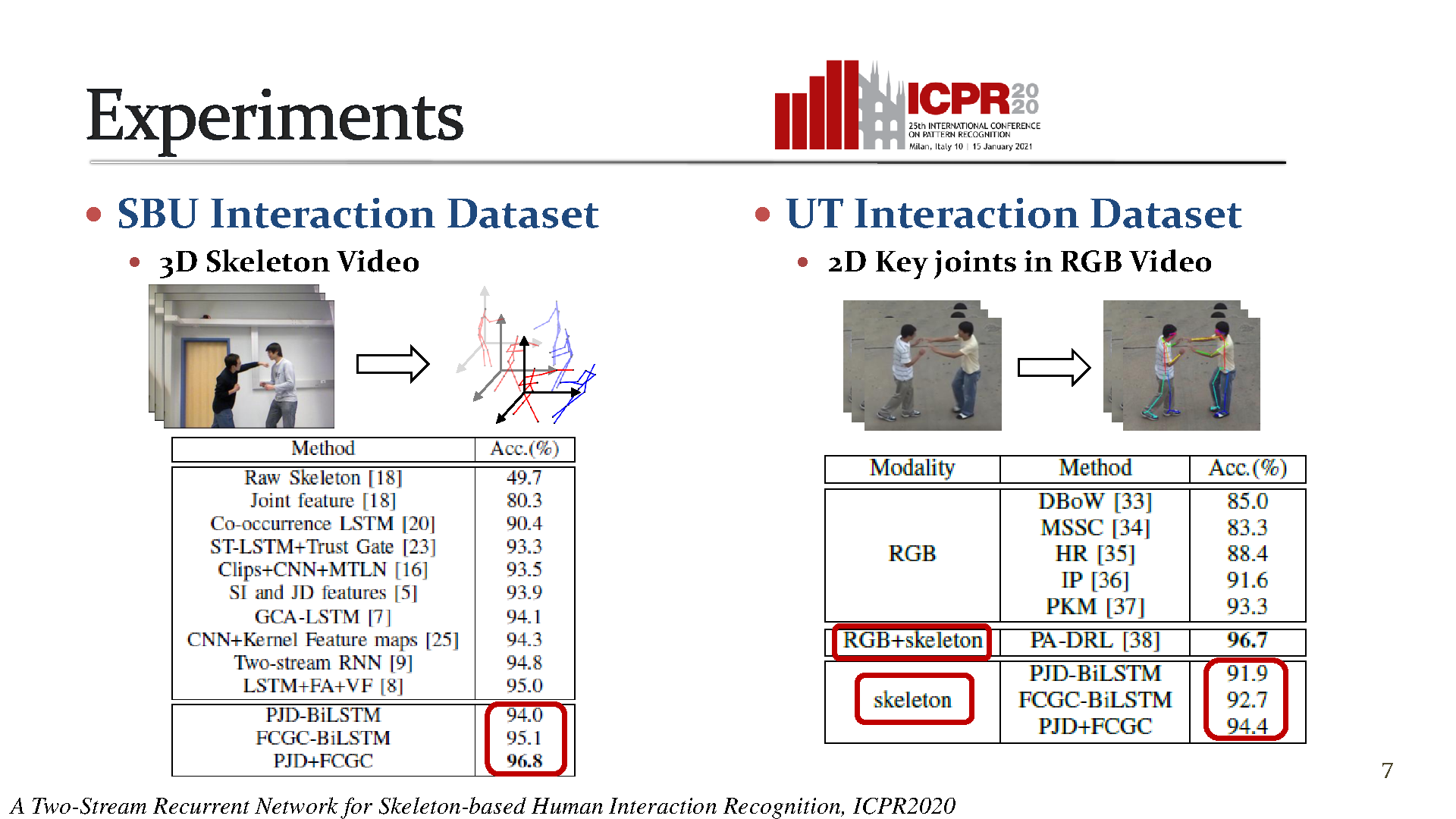

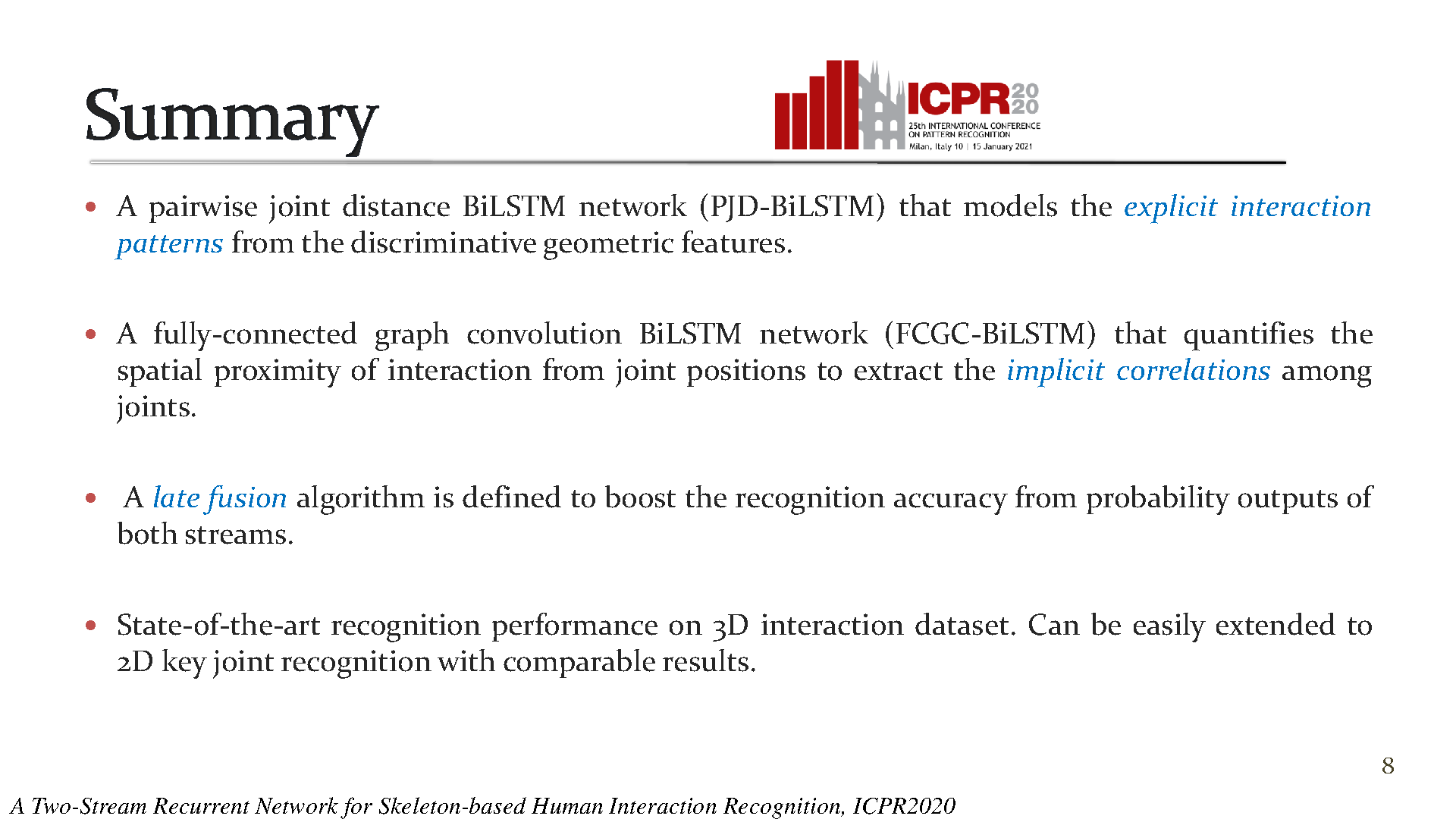

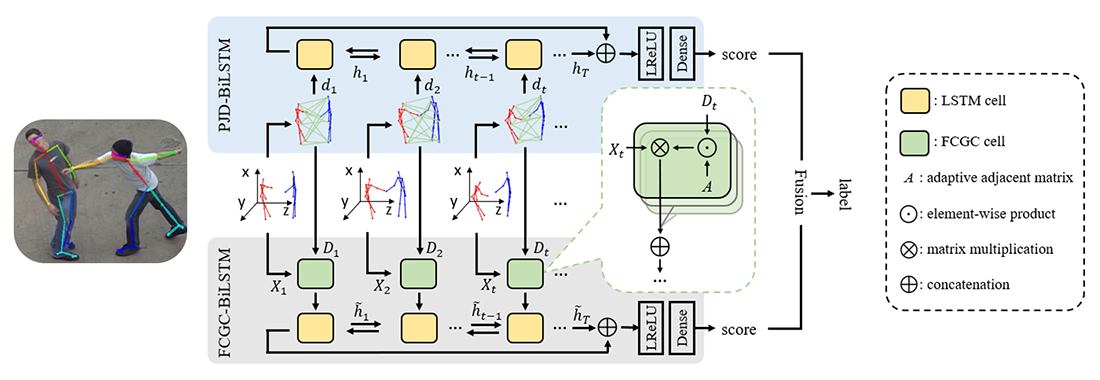

This paper addresses the problem of recognizing human-human interactions from skeletal sequences. Existing works are mainly designed to classify single-person actions. Many of them simply stack the movement features of the two characters to handle human interaction, neglecting the abundant relationships between the characters. In this paper, we propose a novel two-stream recurrent neural network that adopts geometric features from both single actions and interactions to describe spatial correlations with different discriminative abilities. The first stream is built on pairwise joint distance (PJD) in a fully-connected mesh to categorize interactions with explicit distance patterns. To better distinguish similar interactions, the second stream combines PJD with the spatial features from individual joint positions using graph convolutions to detect the implicit correlations among joints, where the joint connections in the graph are adaptive to allow flexible correlations. After spatial modeling, each stream is fed to a bidirectional LSTM to encode two-way temporal properties. To take advantage of the diverse discriminative power of the two streams, we propose a late fusion algorithm that combines their output predictions based on information entropy. Experimental results show that the proposed framework achieves state-of-the-art performance on 3D interaction datasets and comparable performance on 2D interaction datasets. Moreover, late fusion improves recognition accuracy over either single stream.
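To make two of the ideas in the abstract more concrete, the following is a minimal Python sketch, not the authors' implementation. `pairwise_joint_distances` computes one per-frame PJD feature vector over a fully-connected mesh of the joints of both characters. `entropy_weighted_fusion` illustrates one possible entropy-based late fusion. The abstract does not state the exact fusion rule, so the weighting used here (each stream is weighted by how far its prediction entropy falls below the maximum) is an assumption, as are all function names and the toy inputs.

```python
import math
from itertools import combinations


def pairwise_joint_distances(skeleton_a, skeleton_b):
    """Distances between every pair of joints in one frame.

    Joints of both characters are pooled, so the result covers
    intra-person and inter-person pairs (a fully-connected mesh).
    """
    joints = list(skeleton_a) + list(skeleton_b)
    return [math.dist(p, q) for p, q in combinations(joints, 2)]


def entropy(probs, eps=1e-12):
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p + eps) for p in probs)


def entropy_weighted_fusion(probs_1, probs_2):
    """Hypothetical fusion rule (an assumption, not taken from the paper):
    a stream with lower prediction entropy gets a larger weight."""
    max_entropy = math.log(len(probs_1))
    conf_1 = max(0.0, max_entropy - entropy(probs_1))
    conf_2 = max(0.0, max_entropy - entropy(probs_2))
    total = conf_1 + conf_2
    if total == 0.0:
        w1 = w2 = 0.5  # both streams are maximally uncertain
    else:
        w1, w2 = conf_1 / total, conf_2 / total
    return [w1 * p + w2 * q for p, q in zip(probs_1, probs_2)]


# Toy frame: two joints per character, 3D coordinates.
person_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
person_b = [(0.0, 3.0, 4.0), (1.0, 3.0, 4.0)]
pjd = pairwise_joint_distances(person_a, person_b)

# Toy predictions: stream 1 is confident, stream 2 is nearly uniform.
fused = entropy_weighted_fusion([0.9, 0.05, 0.05], [0.3, 0.4, 0.3])
```

In this toy case the confident stream dominates the fused prediction, which is the behavior an entropy-aware fusion is meant to provide. In the full framework described above, the fused vectors would be the outputs of the two bidirectional LSTM streams.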

Publication

A Two-Stream Recurrent Network for Skeleton-Based Human Interaction Recognition by Muhammad Zeeshan Baig, Nauman Aslam and Hubert P. H. Shum in 2022

Proceedings of the 2020 International Conference on Pattern Recognition (ICPR)

Links and Downloads

YouTube